Multimodal Fusion Detection of UAV Target Based on Siamese Network

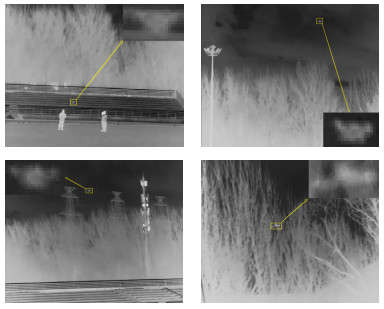

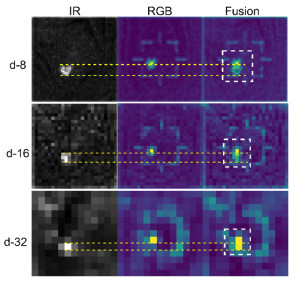

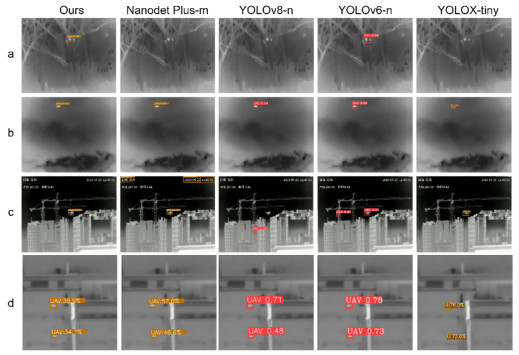

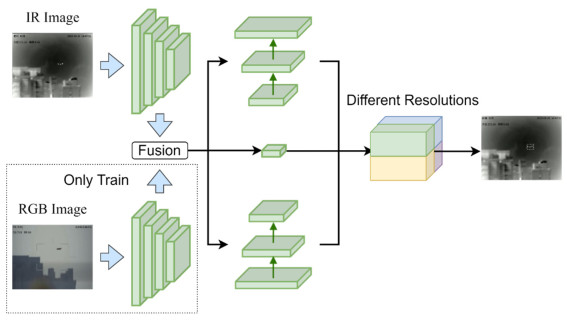

Abstract: Unauthorized ("black") flights of small unmanned aerial vehicles (UAVs) threaten public spaces. To address this problem, this paper proposes a lightweight multimodal adaptive fusion Siamese network (MAFS) that uses multimodal image information of UAV targets. A new adaptive fusion strategy is designed, in which the fusion module defines two trainable model parameters that assign a weight to each modality. The Ghost PAN structure is also rebuilt into a pyramid fusion structure better suited to UAV target detection. Ablation experiments show that each module of the proposed algorithm improves UAV detection accuracy, and comparison experiments against several algorithms show that it is more robust. Compared with Nanodet Plus-m, mAP improves by about 9% in relative terms while detection time remains essentially unchanged.
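The abstract describes the fusion module only at a high level: two trainable parameters weight the two modalities. The following is a minimal sketch of that idea, assuming the fused feature is the weighted sum μ·F_vis + λ·F_ir. The symbols μ and λ are taken from Table 5; the weighted-sum form, the toy squared-error loss, the initial values, the learning rate, and all function names are illustrative assumptions rather than the authors' implementation.

```python
def fuse(f_vis, f_ir, mu, lam):
    """Weighted element-wise sum of two equally sized feature vectors."""
    return [mu * v + lam * r for v, r in zip(f_vis, f_ir)]


def train_weights(f_vis, f_ir, target, steps=200, lr=0.05):
    """Fit mu and lam by plain gradient descent on a mean squared error.

    Toy stand-in for end-to-end training: in a real detector the gradient
    would come from the detection loss, not from a fixed target vector.
    """
    mu, lam = 1.0, 1.0  # assumed starting point: equal modality weights
    n = len(target)
    for _ in range(steps):
        fused = fuse(f_vis, f_ir, mu, lam)
        err = [f - t for f, t in zip(fused, target)]
        # d/dmu mean(err^2) = (2/n) * sum(err * f_vis); likewise for lam.
        grad_mu = 2.0 / n * sum(e * v for e, v in zip(err, f_vis))
        grad_lam = 2.0 / n * sum(e * r for e, r in zip(err, f_ir))
        mu -= lr * grad_mu
        lam -= lr * grad_lam
    return mu, lam


# Toy features; the target is built with weights (0.3, 0.7), so training
# should move mu and lam from (1, 1) toward those values.
f_vis = [1.0, 0.0, 2.0, 1.0]
f_ir = [0.0, 1.0, 1.0, 2.0]
target = fuse(f_vis, f_ir, 0.3, 0.7)
mu, lam = train_weights(f_vis, f_ir, target)
```

Because the weights are ordinary trainable scalars, the balance between the modalities is learned from data instead of being fixed by hand, which is the sense in which the fusion is adaptive.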

Keywords: UAV; lightweight; Siamese network; adaptive fusion strategy; multimodal image


Table 1 Encoder composition

| Layer | Output size | Channel |
|---|---|---|
| Image | 416×416 | 3 |
| Conv1 | 208×208 | 24 |
| MaxPool | 104×104 | |
| Stage2 | 52×52 | 116 |
| Stage3 | 26×26 | 232 |
| Stage4 | 13×13 | 464 |
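The output sizes in Table 1 are consistent with every listed layer halving the spatial resolution of a 416×416 input. The short check below reproduces that arithmetic; the stride of 2 per layer is an assumption inferred from the table values, and the function name is hypothetical.

```python
def feature_map_sizes(input_size, layers, stride=2):
    """Return the side length of the feature map after each layer,
    assuming every layer downsamples by the same integer stride."""
    sizes = {}
    size = input_size
    for name in layers:
        if size % stride != 0:
            raise ValueError(f"{size} is not divisible by stride {stride}")
        size //= stride
        sizes[name] = size
    return sizes


layers = ["Conv1", "MaxPool", "Stage2", "Stage3", "Stage4"]
sizes = feature_map_sizes(416, layers)
for name in layers:
    print(f"{name}: {sizes[name]}x{sizes[name]}")
```

Under this assumption the encoder reduces the input by a total factor of 32, from 416 to 13 per side.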

Table 2 Experimental configuration

| Parameters | Configuration |
|---|---|
| Operating system | Ubuntu 20.04 |
| RAM | 32 GB |
| CPU | Intel Core i5-12400 |
| GPU | GeForce RTX 3060 |
| GPU acceleration environment | CUDA 11.3 |
| Training framework | PyTorch |

Table 3 Specific parameter configuration for algorithm implementation

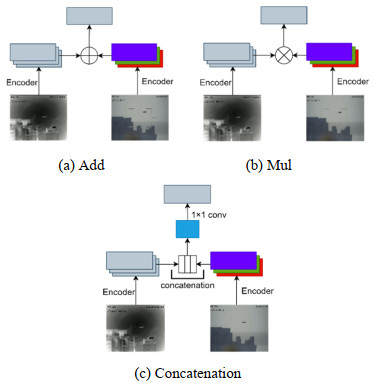

| Parameters | Configuration |
|---|---|
| Model | MAFSnet |
| Training epochs | 100 |
| Batch size | 32 |
| Optimizer | SGD |

Table 4 Multimodal fusion strategy

| Fusion strategy | AP50/% | mAP/% |
|---|---|---|
| Add | 83.00 | 38.26 |
| Mul | 75.17 | 31.86 |
| Cat | 66.32 | 25.91 |
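Table 4 names the three fusion strategies without defining them. Assuming the usual meanings (Add as element-wise addition, Mul as element-wise multiplication, Cat as channel concatenation), the difference between them can be sketched on toy feature maps stored as lists of channels. The function names and the data are hypothetical.

```python
def fuse_add(a, b):
    """Element-wise sum; the channel count is unchanged."""
    return [[x + y for x, y in zip(ca, cb)] for ca, cb in zip(a, b)]


def fuse_mul(a, b):
    """Element-wise product; the channel count is unchanged."""
    return [[x * y for x, y in zip(ca, cb)] for ca, cb in zip(a, b)]


def fuse_cat(a, b):
    """Channel concatenation; the channel count doubles."""
    return [list(c) for c in a] + [list(c) for c in b]


# Two toy feature maps: 2 channels, each flattened to 3 values.
visible = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
infrared = [[0.5, 0.5, 0.5], [2.0, 2.0, 2.0]]

added = fuse_add(visible, infrared)
multiplied = fuse_mul(visible, infrared)
concatenated = fuse_cat(visible, infrared)
```

Note that multiplication zeroes any position where either modality responds with zero, and concatenation doubles the channel count that the following layers must process; addition keeps both the channel count and each modality's individual response.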

Table 5 Weighting factors for multimodal fusion

| μ | λ | AP50/% | mAP/% |
|---|---|---|---|
| | | 80.60 | 36.09 |
| √ | | 80.71 | 36.69 |
| | √ | 81.69 | 37.59 |
| √ | √ | 83.00 | 38.26 |

Table 6 Loss function

| GFLoss | GIoULoss | AP50/% | mAP/% |
|---|---|---|---|
| √ | | 80.83 | 37.24 |
| | √ | 78.17 | 36.87 |
| √ | √ | 83.00 | 38.26 |
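Table 6 lists a GIoU loss as one of the two loss terms but does not spell it out. The sketch below uses the standard generalized IoU definition, GIoU = IoU − (C − U)/C with C the area of the smallest enclosing box and U the union area, and the common loss form 1 − GIoU. Whether the authors use exactly this form and box format is an assumption; boxes here are (x1, y1, x2, y2) with x1 < x2 and y1 < y2.

```python
def giou_loss(box_a, box_b):
    """Return 1 - GIoU for two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection area (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    iou = inter / union

    # Smallest axis-aligned box enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    giou = iou - (enclose - union) / enclose
    return 1.0 - giou


identical = giou_loss((0, 0, 2, 2), (0, 0, 2, 2))
overlapping = giou_loss((0, 0, 2, 2), (1, 1, 3, 3))
disjoint = giou_loss((0, 0, 1, 1), (2, 0, 3, 1))
```

Unlike a plain IoU loss, this term still varies when the boxes do not overlap, because the enclosing-box penalty grows with their separation.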

Table 7 Ablation results for the improved Ghost PAN

| Model | AP50/% | mAP/% | FLOPs/G | Params/M | Latency/s |
|---|---|---|---|---|---|
| Nanodet Plus-Ghost PAN | 79.10 | 35.14 | 0.757 | 4.164 | 0.0082 |
| MAFSnet-Ghost PAN | 79.66 | 36.26 | 0.757 | 4.164 | 0.0082 |
| MAFSnet-our PAN | 80.35 | 37.79 | 0.823 | 4.201 | 0.0086 |
| MAFSnet-our PAN* | 83.00 | 38.26 | 0.895 | 4.397 | 0.0090 |

Table 8 Comparison of the results of different algorithms

| Model | Input shape | AP50/% | mAP/% | FLOPs/G | Params/M | Latency/s |
|---|---|---|---|---|---|---|
| YOLOv6-n | 640×640 | 82.44 | 38.00 | 2.379 | 4.63 | 0.0068 |
| YOLOX-tiny | 416×416 | 82.70 | 36.52 | 3.199 | 5.033 | 0.0055 |
| YOLOv8-n | 640×640 | 81.99 | 37.52 | 1.72 | 3.011 | 0.0062 |
| Nanodet Plus-m | 416×416 | 79.1 | 35.1 | 0.757 | 4.164 | 0.0082 |
| Ours | 416×416 | 83.00 | 38.26 | 0.895 | 4.397 | 0.0090 |
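The 9% mAP gain quoted in the abstract appears to be a relative improvement over Nanodet Plus-m, since the absolute difference in the tables is about 3 percentage points. The check below works through that arithmetic using the unrounded baseline from Table 7 (35.14) and the latencies from Table 8; the helper name is hypothetical.

```python
def relative_change(baseline, new):
    """Percentage change of `new` relative to `baseline`."""
    return (new - baseline) / baseline * 100.0


baseline_map, ours_map = 35.14, 38.26              # mAP/% from Tables 7 and 8
baseline_latency, ours_latency = 0.0082, 0.0090    # seconds per image

map_points = ours_map - baseline_map                        # absolute gain
map_relative = relative_change(baseline_map, ours_map)      # relative gain
latency_ms = (ours_latency - baseline_latency) * 1000.0     # added latency

print(f"mAP gain: {map_points:.2f} points ({map_relative:.1f}% relative)")
print(f"Added latency: {latency_ms:.1f} ms per image")
```

Read this way, the comparison is a gain of roughly 3.1 mAP points for an added latency of under one millisecond per image.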
