Infrared and Visible Image Fusion of Unmanned Agricultural Machinery Based on PIE and CGAN

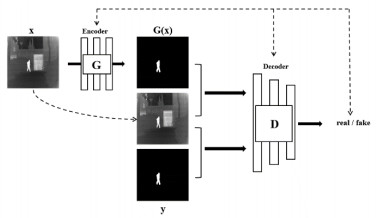

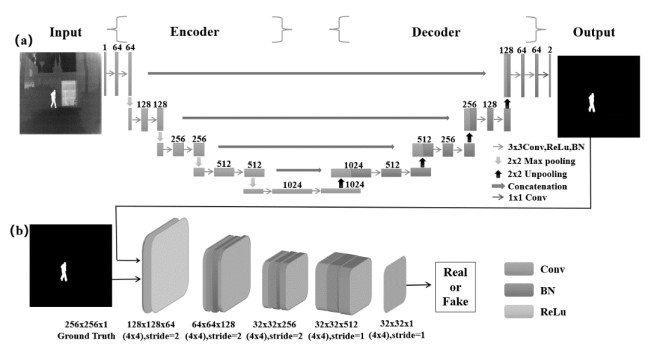

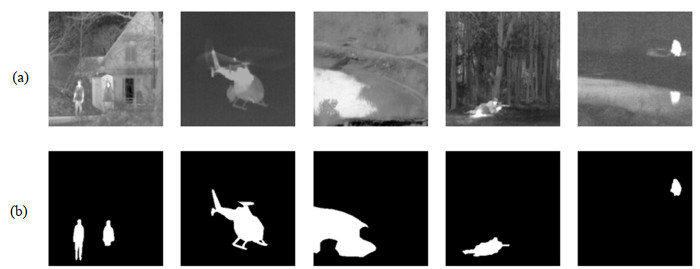

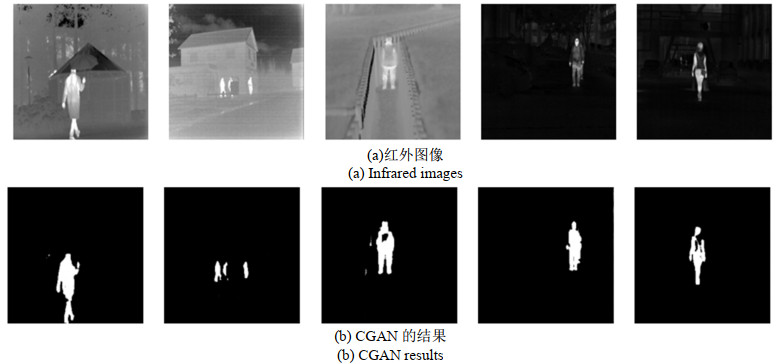

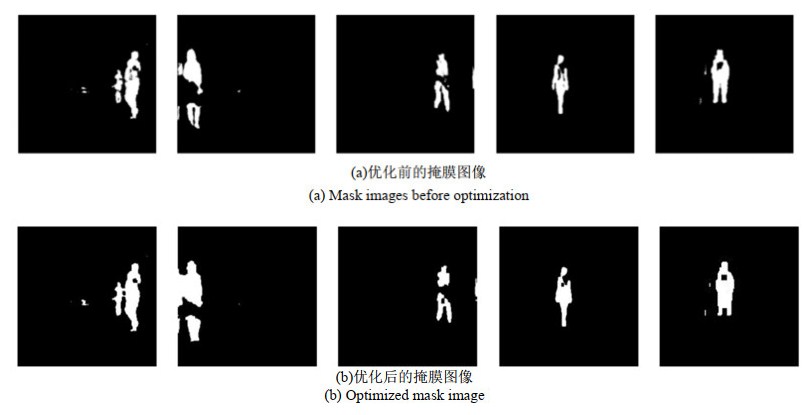

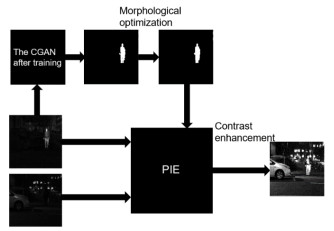

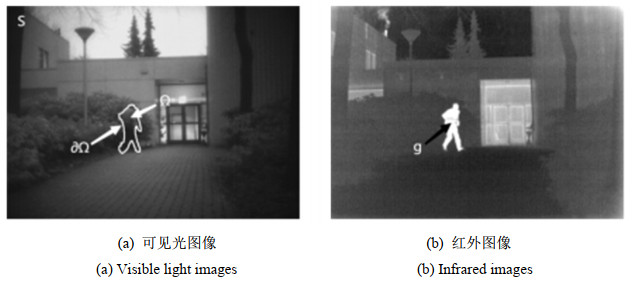

Abstract: To help unmanned agricultural machinery perceive its surroundings promptly and avoid accidents when operating in complex environments, this paper proposes an infrared and visible image fusion algorithm that combines Poisson image editing (PIE) with a conditional generative adversarial network (CGAN). First, the CGAN is trained on infrared images paired with their corresponding salient regions. An infrared image is then fed to the trained network to obtain a salient-region mask. After the mask is refined by morphological operations, the images are fused with PIE. Finally, the contrast of the fused result is enhanced. The algorithm fuses images quickly enough to meet the real-time environmental perception requirements of unmanned agricultural machinery. It retains the detail of the visible image while highlighting important information in the infrared image, such as pedestrians and animals, and it performs well on objective metrics such as standard deviation and information entropy.
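The PIE fusion step can be illustrated with a small, dependency-free sketch. It is not the authors' implementation: the function name `poisson_fuse`, the Gauss-Seidel solver, the iteration count, and the toy 9x9 images are all assumptions made for illustration, and a hand-made rectangular mask stands in for the CGAN output. Inside the mask the result keeps the infrared gradients, while the values around the mask are pinned to the visible image.

```python
def poisson_fuse(visible, infrared, mask, iterations=300):
    """Blend masked infrared content into a grayscale visible image.

    visible, infrared: equally sized 2-D lists of floats.
    mask: 2-D list of bools, True where infrared content is imported.
          Masked pixels on the image border are left unchanged.
    The discrete Poisson equation is solved with Gauss-Seidel sweeps.
    """
    h, w = len(visible), len(visible[0])
    fused = [row[:] for row in visible]  # start from the visible image
    inside = [(y, x) for y in range(1, h - 1)
              for x in range(1, w - 1) if mask[y][x]]
    neighbours = ((-1, 0), (1, 0), (0, -1), (0, 1))
    for _ in range(iterations):
        for y, x in inside:
            total = 0.0
            for dy, dx in neighbours:
                ny, nx = y + dy, x + dx
                # Neighbour value (a fixed visible pixel outside the mask,
                # the current estimate inside it) plus the infrared gradient.
                total += fused[ny][nx] + infrared[y][x] - infrared[ny][nx]
            fused[y][x] = total / 4.0
    return fused


# Toy scene (assumed data): flat visible background and a warm 3x3 target
# in the infrared frame.
visible = [[10.0] * 9 for _ in range(9)]
infrared = [[150.0 if 3 <= y <= 5 and 3 <= x <= 5 else 50.0
             for x in range(9)] for y in range(9)]
# Hand-made 5x5 mask standing in for the CGAN salient-region mask.
mask = [[2 <= y <= 6 and 2 <= x <= 6 for x in range(9)] for y in range(9)]
fused = poisson_fuse(visible, infrared, mask)
```

In this toy case the warm target keeps its 100-level contrast against its surroundings but is shifted onto the visible background level, which is the behaviour that lets PIE insert salient infrared objects without visible seams. For real images, a library routine such as OpenCV's `cv2.seamlessClone` would normally replace the hand-written solver.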

Keywords:

- infrared image

- image fusion

- generative adversarial network

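Table 1 Comparison of objective data of images

| Image | Fusion method | SD | EN | AG |
|---|---|---|---|---|
| Image 1 | WA | 18.563 | 6.095 | 0.022 |
| Image 1 | PCA | 40.323 | 7.149 | 0.054 |
| Image 1 | WT | 20.297 | 6.262 | 0.040 |
| Image 1 | Ours | 69.133 | 7.308 | 0.101 |
| Image 2 | WA | 15.079 | 5.097 | 0.012 |
| Image 2 | PCA | 18.987 | 4.681 | 0.012 |
| Image 2 | WT | 17.711 | 5.274 | 0.022 |
| Image 2 | Ours | 56.634 | 6.894 | 0.045 |
| Image 3 | WA | 22.342 | 5.054 | 0.012 |
| Image 3 | PCA | 22.189 | 5.156 | 0.019 |
| Image 3 | WT | 24.558 | 5.212 | 0.022 |
| Image 3 | Ours | 55.994 | 6.775 | 0.058 |
| Image 4 | WA | 12.861 | 4.763 | 0.013 |
| Image 4 | PCA | 18.170 | 4.431 | 0.015 |
| Image 4 | WT | 16.014 | 5.015 | 0.024 |
| Image 4 | Ours | 79.514 | 6.691 | 0.047 |
| Image 5 | WA | 23.504 | 6.018 | 0.022 |
| Image 5 | PCA | 36.432 | 6.348 | 0.041 |
| Image 5 | WT | 27.516 | 6.182 | 0.037 |
| Image 5 | Ours | 72.358 | 7.531 | 0.068 |
| Image 6 | WA | 24.221 | 5.236 | 0.015 |
| Image 6 | PCA | 41.457 | 5.799 | 0.037 |
| Image 6 | WT | 26.996 | 5.384 | 0.027 |
| Image 6 | Ours | 53.211 | 5.992 | 0.046 |
| Image 7 | WA | 11.552 | 4.325 | 0.010 |
| Image 7 | PCA | 17.432 | 4.611 | 0.012 |
| Image 7 | WT | 14.299 | 4.547 | 0.018 |
| Image 7 | Ours | 67.119 | 7.128 | 0.048 |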

Table 2 SD comparison of objective data

| SD | WA | PCA | WT | Ours |
|---|---|---|---|---|
| Average value | 17.568 | 30.442 | 20.502 | 66.534 |
| Standard deviation | 3.774 | 8.167 | 4.191 | 8.326 |

Table 3 EN comparison of objective data

| EN | WA | PCA | WT | Ours |
|---|---|---|---|---|
| Average value | 4.854 | 5.664 | 5.556 | 6.754 |
| Standard deviation | 0.542 | 0.966 | 0.582 | 0.443 |

Table 4 AG comparison of objective data

| AG | WA | PCA | WT | Ours |
|---|---|---|---|---|
| Average value | 0.018 | 0.031 | 0.033 | 0.086 |
| Standard deviation | 0.004 | 0.012 | 0.007 | 0.021 |
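The three metrics in the tables can be sketched as follows, taking SD as the standard deviation of pixel intensities, EN as the information entropy of the gray-level histogram, and AG as the average gradient. These are common textbook definitions, not formulas confirmed by this page. The function names and the pixel scaling are assumptions, so the output will not necessarily reproduce the tabulated values.

```python
import math
from collections import Counter


def standard_deviation(img):
    """Population standard deviation of all pixel intensities."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def information_entropy(img):
    """Shannon entropy (in bits) of the gray-level histogram."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            dx = img[y][x + 1] - img[y][x]  # horizontal difference
            dy = img[y + 1][x] - img[y][x]  # vertical difference
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((h - 1) * (w - 1))


# Tiny assumed example: a 2x2 image with one vertical edge.
edge = [[0, 255], [0, 255]]
sd = standard_deviation(edge)
en = information_entropy(edge)
ag = average_gradient(edge)
```

Higher values on all three are usually read as better contrast (SD), more information content (EN), and sharper detail (AG), which is how the comparison between WA, PCA, WT, and the proposed method is interpreted.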
