Aerial Infrared Pedestrian Detection with Saliency Map Fusion


Abstract:

Object detection is a fundamental task in computer vision, and drones carrying infrared cameras make nighttime reconnaissance and surveillance practical. Infrared aerial imagery poses several difficulties. Targets are small, and images have little texture and weak contrast. Traditional infrared detection algorithms offer limited accuracy, while deep-learning detectors demand substantial computing power and consume a lot of energy. To address these problems, we propose a pedestrian detection method for infrared aerial scenes that fuses saliency maps. First, U2-Net extracts saliency maps from the original thermal infrared images, and these maps are used to enhance the originals. Second, we analyze the effect of two fusion schemes: pixel-level weighted fusion and image-channel replacement. Third, the prior (anchor) boxes are re-clustered to better fit aerial targets. Experiments show that pixel-level weighted fusion of visual saliency performs best. It raises the average precision of the typical YOLOv3, YOLOv3-tiny, and YOLOv4-tiny detectors by 6.5%, 7.6%, and 6.2%, respectively, which demonstrates the effectiveness of the proposed saliency-fusion method.


Keywords:

- infrared pedestrian detection /

- saliency map /

- image enhancement /

- YOLOv4


0. Introduction

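Object detection is one of the most active research topics in computer vision [1]. Object detection from UAV-mounted infrared cameras is widely used in military reconnaissance [2], intelligent transportation, smart agriculture [3], and other fields. However, mainstream object detection algorithms are designed for daytime or otherwise well-lit scenes, and their accuracy often drops at night or in low-light conditions. Infrared aerial imaging offers a wide field of view and high flexibility. Because a thermal imager senses the temperature of the scene, it is largely insensitive to illumination and still produces good images in low light. Infrared aerial object detection has therefore become an important topic in computer vision.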

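Early aerial object detection relied mainly on hand-crafted features [4]. Aerial infrared images, however, tend to have complex backgrounds, small targets, and large scale variation, which make them even harder for traditional methods. Driven by advances in computer vision, deep-learning detectors have become mainstream. By pipeline, they fall into single-stage (one-stage) and two-stage methods. Two-stage detection is represented by the Faster R-CNN (Region-based Convolutional Neural Network) family [5] and generally achieves higher accuracy. Single-stage detection is represented by the YOLO (You Only Look Once) series [1, 6-8] and SSD (Single Shot MultiBox Detector) [9]. Airborne platforms usually have limited memory, power, and computing capacity. Single-stage algorithms are generally less complex and faster, which makes them better suited to edge devices, and YOLO is the typical example.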

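Compared with visible-light images, infrared images have low contrast and little texture. Deep models trained on large visible-light datasets have made great progress, but accurate and robust object detection in infrared images remains very challenging [4]. Visible-light images are rich in texture but sensitive to illumination, whereas infrared imaging is unaffected by lighting. Fusing infrared and visible images is therefore a worthwhile direction [10], but synchronized imaging or color-infrared image registration is costly. Detection using thermal infrared images alone [11] has therefore become a key research focus.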

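Visual saliency helps extract more information from an image. Salient object detection (SOD) [12] segments the most salient objects in an image at the pixel level. It locates them by how their color, depth, and other cues differ from the surroundings, and it has been applied successfully to tasks such as object recognition [13-14]. Studies have shown that semantic features learned by saliency networks better capture multi-scale contextual information about targets [15]. Saliency maps, however, lack texture. This paper therefore fuses the saliency map of an infrared image with the original infrared image to exploit both sources of information.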

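Based on this analysis, and targeting the characteristics and requirements of infrared aerial scenes, this paper proposes an infrared aerial pedestrian detection method that fuses visual saliency. First, YOLOv4-tiny is used as the base network, and its anchor boxes are re-clustered with K-Means to match the characteristics of infrared aerial data. Next, the state-of-the-art deep salient object detection network U2-Net [15] generates saliency maps from the thermal infrared images, and these maps are fused with the original thermal images to extract more image information. We then analyze how two fusion schemes, pixel-level weighted fusion and channel-replacement fusion, affect aerial infrared object detection. Finally, we verify the effectiveness of the method experimentally. The work offers a practical approach and a body of experimental reference for other infrared object detection tasks.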

1. Related Work

1.1 Object Detection

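Over the past 20 years, visible-light images have been the main subject of object detection research. Traditional detectors rely on hand-crafted features such as the Histogram of Oriented Gradients (HOG) [4], but their accuracy in complex scenes is limited.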

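In recent years, deep-learning-based object detection has made major breakthroughs, and the methods fall mainly into two-stage and single-stage detectors. Two-stage detectors are represented by the R-CNN family [5]. They are accurate but computationally expensive, and they are generally unsuitable for onboard UAV aerial detection.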

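The most representative single-stage algorithms are the YOLO series [6-8]. YOLO treats detection as regression: it regresses bounding-box positions and class labels directly at the output layer, which gives it a speed advantage [6]. YOLOv2 [7] borrowed the anchor mechanism from Faster R-CNN, improving both speed and accuracy. YOLOv3 [8] introduced the Darknet-53 feature extractor and multi-scale detection, and it replaced softmax with independent logistic classifiers, achieving state-of-the-art results at the time. Bochkovskiy et al. [1] optimized the YOLOv3 network structure, data preprocessing, and other components to produce YOLOv4, again improving both speed and accuracy. Its variant YOLOv4-tiny is a detection model optimized for edge computing.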

1.2 Infrared Object Detection

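Infrared imaging penetrates well and is unaffected by illumination, so it is robust to lighting changes during detection. Infrared images still have drawbacks, however: little texture and weak contrast. Traditional infrared aerial object detection relies mainly on hand-crafted features. Liu et al. [16] proposed a discriminative model based on local covariance matrices to handle structured edges, unstructured clutter, and noise in infrared small-target detection. Yuan et al. [17] denoise with a median filter, suppress the background with morphological features, and finally segment targets by thresholding a local-contrast measure. Other researchers fuse infrared images with visible-light images. Li et al. [10] proposed an illumination-aware module that fuses visible and infrared images according to lighting conditions for multispectral pedestrian detection. Chen et al. [18] added a decoder module at the end of the SSD architecture to generate multi-scale features and introduced an attention module, improving infrared pedestrian detection.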

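Fusing visible and infrared images usually requires synchronized imaging, which is costly. For aerial detection using infrared images only, Dai et al. [19] improved YOLOv5 in three ways: they added attention, modified the loss function, and improved prediction-box filtering. This allowed them to detect dim, small infrared targets against complex backgrounds. This paper likewise uses infrared images only and improves accuracy by exploiting saliency information.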

1.3 Saliency-Based Object Detection

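Salient object detection performs pixel-level segmentation of an image [14, 20] and is an active research topic in computer vision. Saliency can be viewed as a form of attention, and fusing a saliency map with a thermal infrared image highlights the salient targets in the scene [14]. Zhao Pengpeng et al. [21] work in three steps. They coarsely localize dim, small infrared targets with fused local entropy, then obtain a saliency map of the region of interest with an improved visual saliency method, and finally extract the targets by threshold segmentation. Zhao Xingke et al. [14] used BASNet to generate saliency maps of thermal infrared images for image enhancement and built an airborne infrared detector on the lightweight YOLOv3-MobileNetv2. This paper uses the deep salient object detection network U2-Net [15] to generate saliency maps of thermal infrared images and evaluates the results in Section 3.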

2. Proposed Method

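Infrared aerial images have small targets, sparse texture, and weak contrast. To address these problems, this paper focuses on infrared aerial pedestrian detection with fused visual saliency. This section first analyzes the YOLOv4 baseline and then anchor re-clustering for infrared aerial scenes. It then focuses on U2-Net, the network used to generate saliency maps of thermal infrared images, and on the two saliency-map fusion schemes.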

2.1 The YOLOv4 Detector

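YOLOv4 builds on YOLOv3 and introduces improved techniques in the input stage, backbone, neck, and output head. It substantially improves detection accuracy while keeping a good balance between speed and accuracy.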

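1) Input: data augmentation such as Mosaic and CutMix is applied during preprocessing to improve training efficiency and detection accuracy. Self-Adversarial Training (SAT) and CmBN (Cross mini-Batch Normalization) improve generalization.

2) Backbone: a new backbone, CSPDarknet53, extracts features. It combines densely connected structures with CSP (Cross Stage Partial) connections.

3) Neck: performs multi-scale feature extraction. A combination of SPP (Spatial Pyramid Pooling) and PANet (Path Aggregation Network) replaces the earlier Feature Pyramid Network (FPN) as the feature-fusion network. This substantially improves accuracy while adding only a little inference time.

4) Output: also called the detection head, it produces the final detections. The YOLO output has three parts: bounding-box location, box confidence, and class information. YOLOv4 uses the CIoU (Complete IoU) loss and replaces standard NMS with DIoU-NMS in the box-filtering stage to improve accuracy.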
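The CIoU loss named in item 4) can be illustrated with a minimal, non-batched sketch in plain Python. It follows the standard CIoU definition: 1 - IoU, plus a normalized center-distance penalty rho^2/c^2, plus an aspect-ratio term alpha*v. It is not the YOLOv4 training code. The (cx, cy, w, h) box format and the function names are assumptions of this example.

```python
import math


def iou_xywh(box1, box2):
    """IoU of two boxes given as (cx, cy, w, h)."""
    x1a, y1a = box1[0] - box1[2] / 2, box1[1] - box1[3] / 2
    x2a, y2a = box1[0] + box1[2] / 2, box1[1] + box1[3] / 2
    x1b, y1b = box2[0] - box2[2] / 2, box2[1] - box2[3] / 2
    x2b, y2b = box2[0] + box2[2] / 2, box2[1] + box2[3] / 2
    inter_w = max(0.0, min(x2a, x2b) - max(x1a, x1b))
    inter_h = max(0.0, min(y2a, y2b) - max(y1a, y1b))
    inter = inter_w * inter_h
    union = box1[2] * box1[3] + box2[2] * box2[3] - inter
    return inter / union


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss = 1 - IoU + rho^2 / c^2 + alpha * v for (cx, cy, w, h) boxes."""
    iou = iou_xywh(pred, gt)
    # Squared distance between the two box centers.
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    left = min(pred[0] - pred[2] / 2, gt[0] - gt[2] / 2)
    right = max(pred[0] + pred[2] / 2, gt[0] + gt[2] / 2)
    top = min(pred[1] - pred[3] / 2, gt[1] - gt[3] / 2)
    bottom = max(pred[1] + pred[3] / 2, gt[1] + gt[3] / 2)
    c2 = (right - left) ** 2 + (bottom - top) ** 2 + eps
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v
```

For identical boxes the loss is 0. Shifting the prediction adds the center-distance penalty on top of 1 - IoU, which is the motivation for the distance term: it still distinguishes predictions whose IoU with the target is the same.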

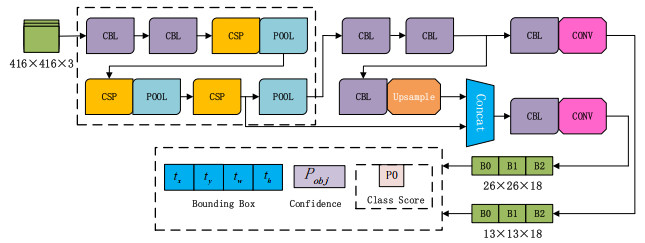

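YOLOv4-tiny is a lightweight network derived from YOLOv4. It reduces the CSP blocks in the backbone and neck, removes the SPP module, and uses fewer output heads. For single-class pedestrian detection, the YOLOv4-tiny architecture used in this paper is shown in Figure 1.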
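For the single-class setting, the shape of each output head follows from the usual YOLO layout. Every anchor predicts four box offsets, one confidence score, and one score per class. The sketch below assumes the common YOLOv4-tiny configuration of two heads at strides 32 and 16 with three anchors each, six anchors in total as in Table 1. It also assumes the 416×416 input of Section 3.2. The function name is hypothetical.

```python
def yolo_head_shapes(input_size=416, strides=(32, 16), anchors_per_scale=3, num_classes=1):
    """Return (grid_h, grid_w, channels) for each YOLO output head.

    Each anchor predicts 4 box offsets + 1 objectness score + num_classes class scores,
    so a head has anchors_per_scale * (5 + num_classes) output channels.
    """
    channels = anchors_per_scale * (4 + 1 + num_classes)
    return [(input_size // s, input_size // s, channels) for s in strides]
```

`yolo_head_shapes()` gives `[(13, 13, 18), (26, 26, 18)]`: two grids of 13×13 and 26×26 cells, each with 3 × (4 + 1 + 1) = 18 output channels. With 80 classes the same formula gives 255 channels.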

2.2 Anchor Re-clustering with K-Means

Since YOLOv2, YOLO has used anchor boxes as prior knowledge in bounding-box regression. The YOLOv4 bounding-box regression is:

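$$
\begin{aligned}
b_x &= \sigma(t_x) + c_x \\
b_y &= \sigma(t_y) + c_y \\
b_w &= p_w \mathrm{e}^{t_w} \\
b_h &= p_h \mathrm{e}^{t_h}
\end{aligned}
$$ (1)

where $b_x$, $b_y$ are the coordinates of the box center, and $b_w$, $b_h$ are the box width and height. As shown in Figure 1, the network learns the box parameters during training. $\sigma(t_x)$ and $\sigma(t_y)$ are the offsets of the box center from the top-left corner of its grid cell on the feature map; the sigmoid $\sigma$ keeps the center inside that cell. $c_x$, $c_y$ are the coordinates of that top-left corner. $t_w$, $t_h$ are log-scale factors relative to the anchor. $p_w$, $p_h$ are the anchor width and height clustered from the dataset.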
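Equation (1) can be traced for a single prediction with the plain-Python sketch below. It assumes that $c_x$, $c_y$, $b_x$, $b_y$ are in grid-cell units (multiply by the stride to get pixels) and that the anchor is given in pixels, so $b_w$, $b_h$ come out in pixels. These unit conventions and the function names are assumptions of the example.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t, cell, anchor):
    """Decode raw outputs (tx, ty, tw, th) into (bx, by, bw, bh) following Eq. (1).

    cell   = (cx, cy): top-left corner of the grid cell, in grid units
    anchor = (pw, ph): anchor width and height, in pixels
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = anchor
    bx = sigmoid(tx) + cx   # sigmoid lies in (0, 1), so the center stays inside the cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)  # tw = 0 reproduces the anchor width exactly
    bh = ph * math.exp(th)
    return bx, by, bw, bh
```

For example, `decode_box((0, 0, 0, 0), (6, 4), (14, 39))` returns `(6.5, 4.5, 14.0, 39.0)`. With zero raw outputs, the box sits at the cell center with exactly the anchor's size, here the first re-clustered anchor from Table 1.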

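Well-designed anchors improve detection performance [20]. The original YOLOv4-tiny anchors suit scenes with large scale variation, so they must be redesigned for aerial scenes. The anchors are clustered with K-Means using a distance based on Intersection over Union (IoU) [8]: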

$$ d(\text{box}, \text{anchor}) = 1 - \operatorname{IoU}(\text{box}, \text{anchor}) $$ (2)

2.3 Saliency Map Generation with U2-Net

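Pedestrians seen from a UAV differ from those seen at ground level. Because of the viewing angle, their boundary geometry is relatively weak in aerial images. U2-Net [15] is a state-of-the-art network designed for salient object detection. It captures context at different scales and can effectively extract salient objects from an image.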

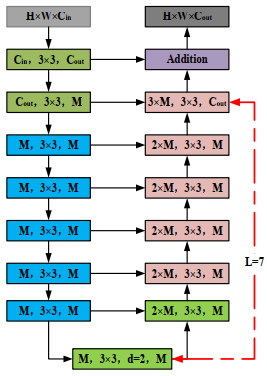

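U2-Net [15] is a two-level nested U-shaped network built on an encoder-decoder structure; Figure 2 shows its overall architecture. The bottom level consists of RSU (residual U-block) modules, and the top level is a U-shaped network made of RSU modules of different depths. This nested U-structure extracts multi-scale features well and aggregates multi-level features effectively. It also does not rely on a pretrained backbone for transfer learning, which makes it flexible. In addition, U2-Net can be made deeper while keeping high-resolution feature maps, without significantly increasing memory or computational cost.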

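U2-Net uses a new residual sub-block, the RSU (residual U-block), shown in Figure 3. In an RSU, L is the number of encoder layers and M is the number of channels in the intermediate layers. Cin and Cout are the input and output channel counts. A larger L lets the block capture larger-scale information. The RSU is itself a small U-shaped structure that fuses features from receptive fields of different sizes, which improves network performance.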

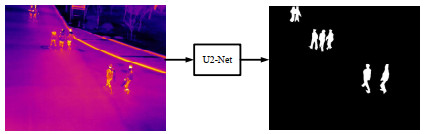

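Figure 4 shows example saliency maps generated by U2-Net. In a variety of complex scenes the saliency maps capture the pedestrian targets, so they can be fused with thermal infrared images for image enhancement.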

2.4 Enhancing Thermal Infrared Images by Saliency-Map Fusion

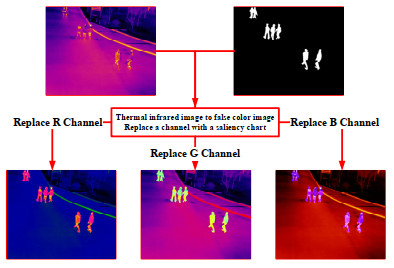

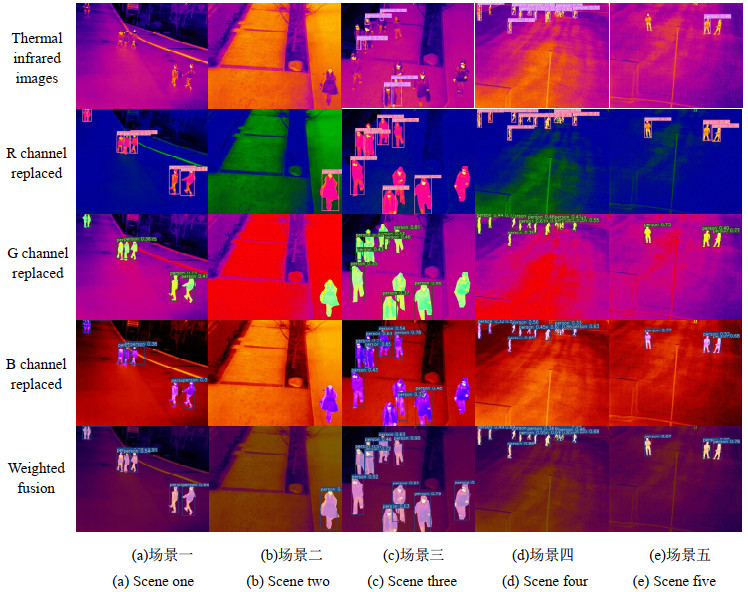

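The infrared aerial dataset ComNet [22] converts single-channel thermal infrared images into three-channel RGB pseudo-color images. The colormap it uses changes smoothly from cool to warm tones. After saliency maps are obtained with U2-Net, two fusion schemes are applied.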

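Scheme 1, channel replacement: as shown in Figure 5, one channel of the three-channel pseudo-color image is replaced by the saliency map. This is done for each of the three channels in turn, producing three fused images.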
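Scheme 1 can be sketched with NumPy as below. The sketch assumes that the pseudo-color image and the saliency map are 8-bit arrays of the same height and width. It also assumes the image is stored in RGB order; OpenCV's `cv2.imread` returns BGR, so the channel index would need remapping. The function name is hypothetical.

```python
import numpy as np


def replace_channel(pseudo_rgb: np.ndarray, saliency: np.ndarray, channel: int) -> np.ndarray:
    """Return a copy of an H x W x 3 uint8 image with one channel replaced by the saliency map.

    channel: 0, 1 or 2 for R, G, B (assumes RGB channel order).
    """
    if pseudo_rgb.shape[:2] != saliency.shape:
        raise ValueError("saliency map must have the same height and width as the image")
    fused = pseudo_rgb.copy()          # leave the original image untouched
    fused[..., channel] = saliency     # overwrite the chosen channel with the saliency map
    return fused
```

Calling it with `channel` set to 0, 1, and 2 produces the R-, G-, and B-replaced variants evaluated in Table 2.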

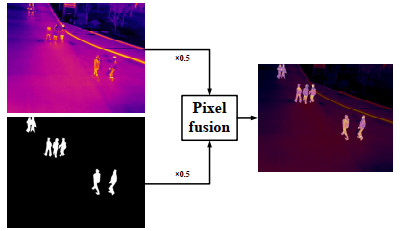

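Scheme 2, weighted fusion: the saliency map and the pseudo-color image are combined by weighted fusion to obtain the enhanced image, as shown in Figure 6. Weighted fusion preserves the texture of the image while making pedestrian regions more prominent.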
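The text does not state the fusion weights. The sketch below therefore uses a single hypothetical weight `alpha` (0.5 by default), applied per pixel as alpha × image + (1 - alpha) × saliency. The single-channel saliency map is broadcast across the three color channels. This is one plausible form of pixel-level weighted fusion, not necessarily the exact formula used in the paper.

```python
import numpy as np


def weighted_fusion(pseudo_rgb: np.ndarray, saliency: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Pixel-level weighted fusion: out = alpha * image + (1 - alpha) * saliency.

    alpha is a hypothetical weight; the H x W saliency map is broadcast to all 3 channels.
    """
    img = pseudo_rgb.astype(np.float32)
    sal = saliency.astype(np.float32)[..., None]   # H x W x 1, broadcasts over the channels
    fused = alpha * img + (1.0 - alpha) * sal
    return np.clip(np.rint(fused), 0, 255).astype(np.uint8)
```

OpenCV's `cv2.addWeighted` computes the same kind of blend once the saliency map has been expanded to three channels.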

3. Experiments

3.1 Dataset and Evaluation Metrics

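The experiments use the ComNet dataset [22]. It contains thermal infrared images of pedestrians captured at different times and in different scenes by a FLIR Vue Pro thermal camera mounted on a DJI M600 Pro drone. The flight altitude was 20-40 m, and the image resolution is 640×512. Examples are shown in Figure 7.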

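The dataset covers several scenes, such as a sports field, a campus, a crowded canteen entrance at noon, and a sparsely populated corridor at night. To keep the evaluation valid, the training and test sets use thermal images captured in different scenes. Only thermal infrared images containing pedestrians were extracted. The training set has 819 images with 3130 pedestrian instances, and the test set has 80 images with 407 pedestrian instances [22].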

Pedestrian detection is evaluated with precision, recall, and average precision (AP). AP50 denotes the class AP at an IoU threshold of 0.5 [14].
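Precision is TP/(TP + FP) and recall is TP/(TP + FN). The sketch below computes both along a confidence-ranked list of detections and then integrates the precision envelope over recall. This is all-point interpolation, as used in PASCAL VOC 2010 and later. The paper does not say which interpolation its AP uses, so this choice is an assumption. The sketch also assumes that detections have already been matched to ground truth at IoU ≥ 0.5. The function name is hypothetical.

```python
def average_precision(scores, is_tp, num_gt):
    """AP for one class from scored detections, using all-point interpolation.

    scores: confidence of each detection
    is_tp:  True if the detection matched a not-yet-matched ground truth at IoU >= 0.5 (AP50)
    num_gt: number of ground-truth objects (TP + FN)
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:                      # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum precision over each recall increment.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

Consider three detections with scores 0.9, 0.8, and 0.7, where the first and third are true positives, against two ground-truth pedestrians. For this case the function returns 5/6 ≈ 0.833.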

3.2 Experimental Environment

Experiments were run on Windows 10 with an Intel i7-8750H CPU (2.2 GHz), an NVIDIA GTX 1060 GPU, and 16 GB of RAM. The software stack was the PyTorch deep-learning framework with Python 3.8.0 and CUDA 11.1.

The network was trained for 100 epochs with a 416×416 input size, a learning rate of 0.001, and a batch size of 8.

3.3 Results and Analysis

3.3.1 Anchor Re-clustering with K-Means

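To improve detection accuracy, the anchors were re-clustered on the dataset with K-Means, yielding the six anchors listed in Table 1. The average IoU between the re-clustered anchors and the dataset boxes is 32.76 percentage points higher than with the original anchors (82.08% vs. 49.32%). With saliency-map fusion on YOLOv4-tiny, anchor re-clustering increases AP by 0.3%. This indicates that better-fitted anchors improve detection accuracy, although the gain here is modest.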
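The re-clustering can be sketched as K-Means over the ground-truth (w, h) pairs with the distance of Eq. (2). The sketch rests on several assumptions. Boxes are compared as if they were centered at the same point, the usual convention for anchor clustering. Cluster centers are updated with the mean, although some implementations use the median. Initialization is random. The "IOU/%" column of Table 1 is taken to be the mean best-anchor IoU computed by `mean_best_iou`. All function names are hypothetical.

```python
import random


def iou_wh(box, anchor):
    """IoU of two (w, h) boxes aligned at the same center, as used for anchor clustering."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    return inter / (box[0] * box[1] + anchor[0] * anchor[1] - inter)


def kmeans_anchors(boxes, k=6, iters=100, seed=0):
    """Cluster (w, h) pairs with distance d = 1 - IoU (Eq. 2); return anchors sorted by area."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)                     # random initial centers
    for _ in range(iters):
        # Assign each box to the anchor with the smallest 1 - IoU distance.
        clusters = [[] for _ in range(k)]
        for b in boxes:
            j = min(range(k), key=lambda a: 1 - iou_wh(b, anchors[a]))
            clusters[j].append(b)
        # Move each anchor to the mean (w, h) of its cluster; keep empty clusters unchanged.
        new = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else anchors[i]
            for i, c in enumerate(clusters)
        ]
        if new == anchors:                             # converged
            break
        anchors = new
    return sorted(anchors, key=lambda a: a[0] * a[1])


def mean_best_iou(boxes, anchors):
    """Average over boxes of the IoU with the best-matching anchor."""
    return sum(max(iou_wh(b, a) for a in anchors) for b in boxes) / len(boxes)
```

Running `kmeans_anchors(wh_list, k=6)` on the training-set box sizes would give six anchors analogous to the "Ours" row of Table 1; the exact values depend on the initialization.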

Table 1. K-Means anchor re-clustering results

| Model | Anchor boxes | Avg. IoU/% |
|---|---|---|
| YOLOv4-tiny | (10, 14) (23, 27) (37, 58) (81, 82) (135, 169) (344, 319) | 49.32 |
| Ours | (14, 39) (21, 69) (26, 95) (38, 85) (33, 113) (45, 130) | 82.08 |

3.3.2 Analysis of U2-Net Saliency-Map Fusion

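Reference [14] showed that BASNet is effective for saliency-map extraction. Similarly, U2-Net processes the thermal infrared image, extracts the pedestrian targets, and outputs them as a binary mask. To assess how well U2-Net extracts saliency maps, and to ground the study of fusion schemes that follows, we took the typical YOLOv3 and YOLOv4-tiny detectors as baselines. We then compared saliency maps generated by U2-Net and BASNet under the different fusion schemes.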
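U2-Net's raw output is a per-pixel saliency score. Turning it into the binary mask described above requires a normalization and a threshold that the text does not specify. The sketch assumes min-max normalization followed by a hypothetical threshold of 0.5, and it produces a 0/255 uint8 mask that either fusion scheme can consume. The function name is hypothetical.

```python
import numpy as np


def saliency_to_mask(pred: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Min-max normalize a saliency prediction and binarize it into a 0/255 uint8 mask.

    threshold is a hypothetical choice; the text only states that the output is a binary mask.
    """
    pred = pred.astype(np.float32)
    lo, hi = float(pred.min()), float(pred.max())
    # A constant map carries no saliency information, so it maps to an all-zero mask.
    norm = (pred - lo) / (hi - lo) if hi > lo else np.zeros_like(pred)
    return np.where(norm >= threshold, 255, 0).astype(np.uint8)
```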

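Table 2 compares the saliency maps extracted by BASNet and U2-Net under each fusion scheme. For both YOLOv3 and YOLOv4-tiny, U2-Net saliency maps outperform BASNet maps in every scheme, which suggests that U2-Net captures more contextual information.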

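Table 2. AP50 of different fusion methods and saliency-map extraction networks

| Data fusion method | Saliency map extraction | YOLOv3 AP50/% | YOLOv4-tiny AP50/% |
|---|---|---|---|
| Original infrared image | - | 88.8 | 88.6 |
| Saliency map only | - | 77.1 | 75.6 |
| R channel replaced | BASNet | 92.7 | 91.7 |
| R channel replaced | U2-Net | 94.7 | 93.7 |
| G channel replaced | BASNet | 93.8 | 91.1 |
| G channel replaced | U2-Net | 94.2 | 94.7 |
| B channel replaced | BASNet | 90.5 | 91.5 |
| B channel replaced | U2-Net | 92.4 | 92.9 |
| Weighted fusion | BASNet | 94.4 | 92.0 |
| Weighted fusion | U2-Net | 95.3 | 94.8 |

As Table 2 shows, YOLOv4-tiny reaches an AP of 88.6% on the original thermal infrared images and 75.6% on the saliency maps alone. The saliency maps thus carry useful information. Because U2-Net produces only a binary mask, however, the original infrared images contain more texture. Every channel-replacement variant improves pedestrian detection: replacing the R, G, and B channels raises AP over the baseline by 5.1, 6.1, and 4.3 percentage points, respectively. This suggests that, for low-contrast infrared images, substituting a saliency channel increases image contrast and improves detection. Weighted fusion raises AP by 6.2 percentage points, more than any channel-replacement variant, which suggests that it retains more of the available information. These results show that fusing thermal infrared images with saliency maps is an effective data-enhancement approach for pedestrian detection, and that weighted fusion outperforms channel replacement.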
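The gains quoted above are absolute differences in AP50 between each fused variant and the 88.6% baseline, that is, percentage points. They can be checked directly from the YOLOv4-tiny, U2-Net rows of Table 2:

```python
# AP50 (%) of YOLOv4-tiny with U2-Net saliency maps, copied from Table 2.
baseline = 88.6
fused = {
    "R channel replaced": 93.7,
    "G channel replaced": 94.7,
    "B channel replaced": 92.9,
    "Weighted fusion": 94.8,
}
# Gains are absolute differences in percentage points, rounded to one decimal place.
gains = {name: round(ap - baseline, 1) for name, ap in fused.items()}
print(gains)
```

This prints `{'R channel replaced': 5.1, 'G channel replaced': 6.1, 'B channel replaced': 4.3, 'Weighted fusion': 6.2}`, which matches the text.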

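Figure 8 shows YOLOv4-tiny inference examples for the different fusion schemes. Fusing the thermal infrared image with the saliency map clearly makes targets stand out. Across multiple scenes, the enhanced images yield better detections than thermal images alone. The original thermal images show obvious missed detections, and these are markedly reduced after saliency enhancement. The fusion schemes also differ in their effect: among the channel-replacement variants, replacing the G channel gives the highest target confidence. In Figure 8, weighted fusion produces more accurate boxes with higher confidence than channel replacement. For example, in the crowded scene of Figure 8(c), weighted fusion detects the two pedestrians in the lower-right corner, clearly reducing missed detections.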

3.3.3 Comparison with State-of-the-Art Detectors

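To further verify the weighted saliency-fusion scheme, we compare it with several state-of-the-art infrared detectors: AIR-YOLOv3 [23], the method of Zhao Xingke et al. [14], and YOLOv3. The comparison covers accuracy on the ComNet dataset [22] and model size. The results are listed in Table 3.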

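As Table 3 shows, the proposed method is 10.9% and 6.0% more accurate than AIR-YOLOv3 [23] and the method of Zhao Xingke et al. [14], respectively. Its model is 12.4 MB larger than AIR-YOLOv3, but its accuracy is clearly higher. Moreover, weighted saliency fusion also helps the typical YOLOv3, YOLOv3-tiny, and YOLOv4-tiny detectors: used as data enhancement, it raises their AP over the original algorithms by 6.5%, 7.6%, and 6.2%, respectively. This demonstrates the effectiveness of the proposed fusion method. Weighted fusion is thus an effective way to enhance thermal infrared data in infrared aerial scenes and may serve as a reference for other scenarios.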

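YOLOv4-tiny runs faster than the earlier YOLOv3 and YOLOv3-tiny [1]. Compared with YOLOv4-tiny on raw thermal infrared images, its AP after weighted saliency fusion increases by 8.8%, yielding a detector that is better suited to deployment on edge devices.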

4. Conclusion

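In summary, infrared aerial images suffer from small target size, sparse texture, and weak contrast. To address these problems, this paper proposes a pedestrian detection method for infrared aerial scenes that fuses visual saliency. Built on YOLOv4-tiny, the method improves the detector by re-clustering anchors, extracts saliency maps of thermal infrared images with U2-Net, and enhances the thermal images by pixel-level weighted fusion. Experiments on a public aerial dataset show that the method effectively improves the accuracy of several typical detectors.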


[1] BOCHKOVSKIY Alexey, WANG Chienyao, LIAO Hongyuan. YOLOv4: optimal speed and accuracy of object detection[EB/OL]. [2020-08-28]. https://arxiv.org/abs/2004.10934.

[2] GU J J, LI B Z, LIU K, et al. Infrared ship target detection algorithm based on improved Faster R-CNN[J]. Infrared Technology, 2021, 43(2): 170-178. http://hwjs.nvir.cn/article/id/6dc47229-7cdb-4d62-ae05-6b6909db45b9 (in Chinese)

[3] YANG S Q, LIU J C, XU K K, et al. Remote sensing image recognition of corn stamens based on improved CenterNet for unmanned aerial vehicles[J]. Transactions of the Chinese Society for Agricultural Machinery, 2021, 52(9): 206-212. (in Chinese)

[4] MIEZIANKO Roland, POKRAJAC Dragoljub. People detection in low resolution infrared videos[C]//Proceedings of the 2008 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, 2008: 1-6.

[5] REN Shaoqing, HE Kaiming, GIRSHICK Ross, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137-1149.

[6] REDMON Joseph, DIVVALA Santosh, GIRSHICK Ross, et al. You only look once: unified, real-time object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016: 779-788.

[7] REDMON Joseph, FARHADI Ali. YOLO9000: better, faster, stronger[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017: 7263-7271.

[8] REDMON Joseph, FARHADI Ali. YOLOv3: an incremental improvement[EB/OL]. [2018-04-08]. https://arxiv.org/abs/1804.02767v1.

[9] LIU Wei, ANGUELOV Dragomir, ERHAN Dumitru, et al. SSD: single shot multibox detector[C]//Proceedings of the European Conference on Computer Vision, 2016: 21-37.

[10] LI Chengyang, SONG Dan, TONG Ruofeng, et al. Illumination-aware faster R-CNN for robust multispectral pedestrian detection[J]. Pattern Recognition, 2019, 85: 161-171. DOI: 10.1016/j.patcog.2018.08.005

[11] QIU G Q, YANG H J, WANG Y T, et al. Airborne infrared dim small target detection based on visual feature fusion[J]. Laser & Optoelectronics Progress, 2020, 57(18): 79-86. (in Chinese)

[12] LI W R, XU D, SHI J L, et al. Review of salient object detection research: methods, applications and trends[J/OL]. Application Research of Computers. https://doi.org/10.19734/j.issn.1001-3695.2021.12.0645. (in Chinese)

[13] LIU Yixiu, ZHANG Yunzhou, COLEMAN Sonya, et al. A new patch selection method based on parsing and saliency detection for person re-identification[J]. Neurocomputing, 2020, 374: 86-99. DOI: 10.1016/j.neucom.2019.09.073

[14] ZHAO X K, LI M L, ZHANG G, et al. Object detection method based on saliency map fusion for UAV-borne thermal images[J]. Acta Automatica Sinica, 2021, 47(9): 2120-2131. (in Chinese)

[15] QIN Xuebin, ZHANG Zichen, HUANG Chenyang, et al. U2-Net: Going deeper with nested U-structure for salient object detection[J]. Pattern Recognition, 2020, 106: 107404. DOI: 10.1016/j.patcog.2020.107404

[16] LIU R Y, Aiskar Aimudu. Infrared small target detection method based on local covariance matrix discriminant model[J]. Laser & Infrared, 2020, 50(6): 761-768. DOI: 10.3969/j.issn.1001-5078.2020.06.019 (in Chinese)

[17] YUAN M, SONG Y S, ZHANG Z Q, et al. Infrared small target detection method based on enhanced local contrast[J]. Laser & Optoelectronics Progress. https://kns.cnki.net/kcms/detail/31.1690.tn.20220524.1403.002.html. (in Chinese)

[18] CHEN Yunfan, SHIN Hyunchul. Pedestrian detection at night in infrared images using an attention-guided encoder-decoder convolutional neural network[J]. Applied Sciences, 2020, 10(3): 809. DOI: 10.3390/app10030809

[19] DAI J, ZHAO X, LI L P, et al. Infrared small target detection algorithm in complex background based on improved YOLOv5[J]. Infrared Technology, 2022, 44(5): 504-512. http://hwjs.nvir.cn/article/id/f71aa5f4-92b0-4570-9056-c2abd5506021 (in Chinese)

[20] LUO H L, CHEN H K. A review of object detection based on deep learning[J]. Acta Electronica Sinica, 2020, 48(6): 1230-1239. (in Chinese)

[21] ZHAO P P, LI S Z, LI X, et al. Infrared weak and small target detection combining visual saliency and local entropy[J]. Chinese Optics, 2022, 15(2): 267-275. (in Chinese)

[22] LI Minglei, ZHAO Xingke, LI Jiasong, et al. ComNet: combinational neural network for object detection in UAV-Borne thermal images [J]. IEEE Trans on Geoscience and Remote Sensing, 2021, 59(8): 6662-6673. DOI: 10.1109/TGRS.2020.3029945

[23] SHAO Yanhua, ZHANG Xingping, CHU Hongyu, et al. AIR-YOLOv3: aerial infrared pedestrian detection via an improved YOLOv3 with network pruning[J]. Applied Sciences, 2022, 12(7): 3627. DOI: 10.3390/app12073627
