Fusion Method for Polarization Direction Image Based on Double-branch Antagonism Network


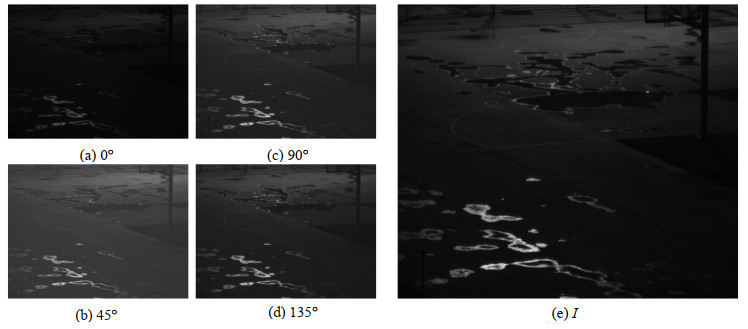

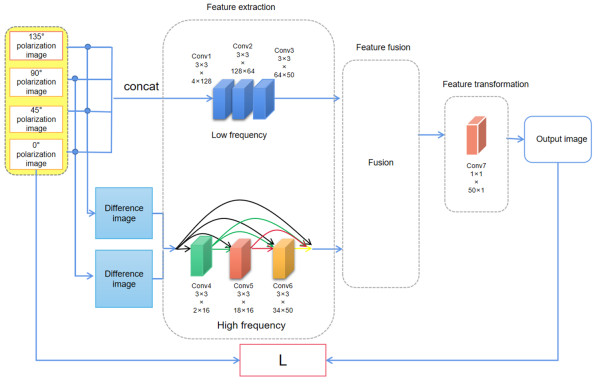

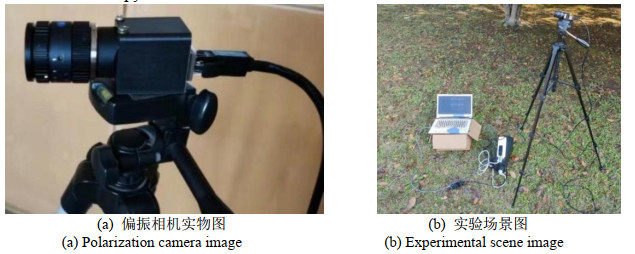

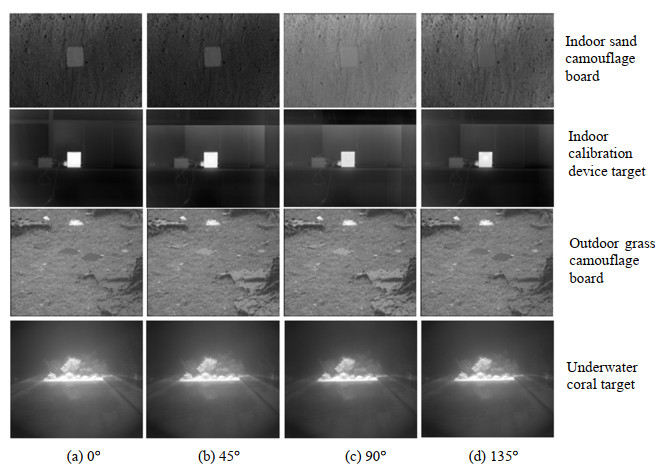

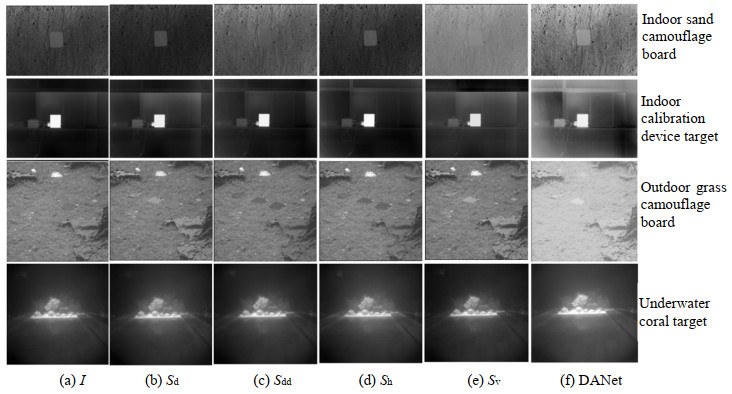

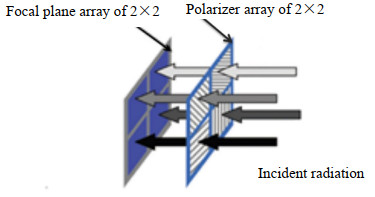

Abstract: To improve the fusion of polarization direction images, this study presents a double-branch antagonism network (DANet). The network has three main modules: feature extraction, feature fusion, and feature transformation. First, the feature extraction module comprises a low-frequency branch and a high-frequency branch. The polarization direction images at 0°, 45°, 90°, and 135° are concatenated and fed to the low-frequency branch to extract energy features, while the two pairs of antagonistic images (0° and 90°, 45° and 135°) are differenced and fed to the high-frequency branch to extract detail features. Second, the energy features and detail features are fused. Finally, the fused features are transformed into the fused image. Experimental results show that the fused images obtained with DANet improve markedly in both visual quality and evaluation metrics: compared with the composite intensity image I and the polarization antagonistic images Sd, Sdd, Sh, and Sv, the average gradient, information entropy, spatial frequency, and mean gray value increase by at least 22.16%, 9.23%, 23.44%, and 38.71%, respectively.
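The input preparation described in the abstract can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `prepare_danet_inputs` is hypothetical, NumPy is assumed as the array library, and the sign convention of the differences (0° minus 90°, 45° minus 135°) is an assumption, since the abstract only says the two antagonistic pairs are subtracted.

```python
import numpy as np


def prepare_danet_inputs(i0, i45, i90, i135):
    """Build the two branch inputs from four polarization direction images.

    Each argument is a 2-D array of the same shape (H, W).
    Returns (low_in, high_in) with shapes (4, H, W) and (2, H, W).
    """
    images = [np.asarray(x, dtype=np.float32) for x in (i0, i45, i90, i135)]
    if len({img.shape for img in images}) != 1:
        raise ValueError("all four images must share one shape")
    a0, a45, a90, a135 = images

    # Low-frequency branch input: the four images stacked as channels.
    low_in = np.stack([a0, a45, a90, a135], axis=0)

    # High-frequency branch input: one difference image per antagonistic pair.
    # ASSUMPTION: the subtraction order is illustrative only.
    high_in = np.stack([a0 - a90, a45 - a135], axis=0)
    return low_in, high_in
```

The resulting channel counts (4 and 2) match the input widths listed for Conv1 and Conv4 in Table 1.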


Keywords:

- image fusion

- deep learning

- polarization image


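Table 1 Network parameters

| Module | Branch | Layer | Input channels | Output channels |
| --- | --- | --- | --- | --- |
| Feature extraction | Low frequency | Conv1 | 4 | 128 |
| Feature extraction | Low frequency | Conv2 | 128 | 64 |
| Feature extraction | Low frequency | Conv3 | 64 | 50 |
| Feature extraction | High frequency | Conv4 | 2 | 16 |
| Feature extraction | High frequency | Conv5 | 18 | 16 |
| Feature extraction | High frequency | Conv6 | 34 | 50 |
| Feature fusion | | Fusion | 50 | 50 |
| Feature transformation | | Conv7 | 50 | 1 |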
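The high-frequency input widths in Table 1 (2, 18, 34) are what one would get if each layer received the branch input concatenated with the outputs of all earlier layers. That dense-connection reading is inferred from the channel counts alone and is not stated in the table. A small bookkeeping sketch, with the hypothetical helper `dense_block_channels`, checks the arithmetic:

```python
def dense_block_channels(in_channels, out_channels_per_layer):
    """Return (input, output) channel pairs for a densely connected stack.

    Each layer is assumed to receive the block input concatenated with the
    outputs of all earlier layers, so its input width is a running sum.
    """
    pairs = []
    running = in_channels
    for out_channels in out_channels_per_layer:
        pairs.append((running, out_channels))
        running += out_channels  # later layers also see this layer's output
    return pairs


# High-frequency branch: 2 difference images in, layers emitting 16, 16, 50.
high_frequency = dense_block_channels(2, [16, 16, 50])
print(high_frequency)  # → [(2, 16), (18, 16), (34, 50)]
```

The low-frequency branch (4 → 128 → 64 → 50) is a plain chain by the same check, since each input width equals the previous output width.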

Table 2 Training parameters

| Parameter | Value |
| --- | --- |
| Training set | 8388 |
| Testing set | 932 |
| Training round | 20 |
| Epoch | 4 |
| Optimizer | Adam |
| Activation function | ReLU |
| Initial learning rate | 1e-4 |
| Learning rate decay | 0.5 × lr every 4 rounds |
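Table 2 gives an initial learning rate of 1e-4 and a decay entry that reads most naturally as halving the rate every 4 training rounds. Under that reading, a step-decay schedule over the 20 rounds could be sketched as below. The function name `learning_rate`, the zero-based round index, and the choice to apply the decay at rounds 4, 8, 12, and 16 are all assumptions.

```python
def learning_rate(round_index, initial_lr=1e-4, decay=0.5, step=4):
    """Step decay: multiply the rate by `decay` once per `step` rounds.

    ASSUMPTION: rounds are counted from 0, so rounds 0-3 use the initial
    rate, rounds 4-7 use half of it, and so on.
    """
    if round_index < 0:
        raise ValueError("round_index must be non-negative")
    return initial_lr * decay ** (round_index // step)


# Learning rate for each of the 20 training rounds listed in Table 2.
schedule = [learning_rate(r) for r in range(20)]
```

With these assumptions the rate falls from 1e-4 in the first four rounds to 6.25e-6 in the last four.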

Table 3 Evaluation indexes of the output results

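| Metric | I | Sd | Sdd | Sh | Sv | DANet |
| --- | --- | --- | --- | --- | --- | --- |
| AG (average gradient) | 0.0099 | 0.0128 | 0.0119 | 0.0144 | 0.0126 | 0.0185 |
| IE (information entropy) | 6.06 | 6.18 | 6.08 | 6.15 | 6.39 | 7.04 |
| SF (spatial frequency) | 0.35 | 0.49 | 0.40 | 0.46 | 0.45 | 0.64 |
| IM (mean gray value) | 41 | 49 | 47 | 46 | 57 | 93 |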
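The "at least" percentages quoted in the abstract can be reproduced from Table 3 by taking, for each metric, the gap between DANet and the best competing image and dividing it by the DANet value. This normalization is inferred from the numbers rather than stated in the text. Dividing by the baseline value instead would give larger figures. The helper name `minimum_gain_percent` is hypothetical.

```python
# Values copied from Table 3.
TABLE3 = {
    "AG": {"I": 0.0099, "Sd": 0.0128, "Sdd": 0.0119, "Sh": 0.0144, "Sv": 0.0126, "DANet": 0.0185},
    "IE": {"I": 6.06, "Sd": 6.18, "Sdd": 6.08, "Sh": 6.15, "Sv": 6.39, "DANet": 7.04},
    "SF": {"I": 0.35, "Sd": 0.49, "Sdd": 0.40, "Sh": 0.46, "Sv": 0.45, "DANet": 0.64},
    "IM": {"I": 41, "Sd": 49, "Sdd": 47, "Sh": 46, "Sv": 57, "DANet": 93},
}


def minimum_gain_percent(row, method="DANet"):
    """Smallest gain of `method` over any other column, as a percentage.

    ASSUMPTION: the gain is normalized by the value of `method` itself,
    which is the convention that matches the figures in the abstract.
    """
    best_baseline = max(value for name, value in row.items() if name != method)
    return 100.0 * (row[method] - best_baseline) / row[method]


gains = {metric: round(minimum_gain_percent(row), 2) for metric, row in TABLE3.items()}
print(gains)  # → {'AG': 22.16, 'IE': 9.23, 'SF': 23.44, 'IM': 38.71}
```

The strongest baseline differs by metric: Sh for AG, Sv for IE and IM, and Sd for SF.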

