Infrared and Visible Image Fusion Based on Deep Image Decomposition


Abstract: Infrared and visible image fusion is an image enhancement technique that aims to produce a fused image retaining the advantages of the source images. This paper proposes an infrared and visible image fusion method based on deep image decomposition. First, an encoder decomposes each source image into a background feature map and a detail feature map, and a saliency feature extraction module in the encoder highlights the edge and texture features of the source images. The decoder then produces the fused image. During training, a gradient coefficient penalty is applied to the visible image as a regularizer for reconstruction to ensure texture consistency, and separate loss functions are designed for image decomposition and image reconstruction. These losses reduce the difference between the background feature maps while amplifying the difference between the detail feature maps. Experimental results show that the method generates fused images with rich details and bright targets, and that it outperforms the compared methods in both subjective and objective evaluations on the public TNO and FLIR datasets.


Keywords:

- image fusion
- deep learning
- saliency feature
- multi-scale decomposition


0. Introduction

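Infrared and visible image fusion (IVIF) is a specific image fusion technique that produces images with richer and more complete information, providing better visual perception and more accurate object detection for applications in military, security, medical and other fields [1]. Infrared and visible images have different characteristics. Infrared images are robust to illumination changes and human-made interference, but have low spatial resolution and poor texture detail. Visible images have high spatial resolution and contain rich appearance and gradient information, but are easily disturbed by occlusions and light reflections. Fusing the two therefore retains both the thermal radiation information of the infrared image and the morphological features of the visible image, which improves target recognition and tracking [2].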

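Current IVIF algorithms fall into two broad categories: traditional methods and deep-learning-based methods. Traditional fusion methods are mainly based on multi-scale transforms or on sparse representation. These methods are computationally expensive and often require a different fusion strategy to be designed for each fusion task [3].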

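To overcome these shortcomings, researchers began applying deep learning to image fusion. Deep-learning-based methods fall into three main categories. The first is an extension of multi-scale transforms: these methods convert the image from the spatial domain into a background domain and a detail domain by filtering or other means. For example, Li et al. proposed a deep learning fusion framework based on a pretrained VGG-19 model, but the VGG network has limited feature extraction capability, and deep learning is used only in the fusion stage [4]. The second category fuses images directly with end-to-end neural networks [5]. FusionGAN, for instance, generates the fused image through an adversarial game between a generator and a discriminator; however, its fused image tends to resemble only one of the source images, so part of the information in the other source image is lost [6]. The third category is based on autoencoders (AE), such as DenseFuse, in which the autoencoder network is trained in the training phase and the fused image is recovered by the decoder in the test phase [7]. However, this method fuses the intermediate features with traditional hand-crafted fusion rules, which limits its fusion performance [8].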

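In summary, to extract source-image features effectively, preserve the important information of the source images during fusion, and obtain fused images with rich information, high contrast and sharp contours, this paper combines the first and third categories of fusion methods and proposes an IVIF method based on deep image decomposition. The main contributions are as follows: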

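- First, a multi-scale feature extraction network decomposes the input infrared and visible images into background feature maps and detail feature maps, which carry, respectively, the low-frequency information of large-scale pixel variations and the high-frequency information of small-scale pixel variations. This decomposition captures the image content effectively and provides richer feature representations for the subsequent steps.
- Second, a saliency feature extraction module is introduced into the encoder to identify salient regions in the background and detail feature maps, improving the perceptual capability of the model.
- Third, to make effective use of the extracted source features and to preserve the pixel intensity and texture information of the input images during reconstruction, dedicated loss functions are designed for image decomposition and for image reconstruction.
- Finally, the fusion results are compared with those of other methods on the public TNO and FLIR datasets. Both subjective visual assessment and objective metrics show that the proposed method produces fused images with rich details and bright targets.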

1. Structure of the proposed algorithm

1.1 Training network structure

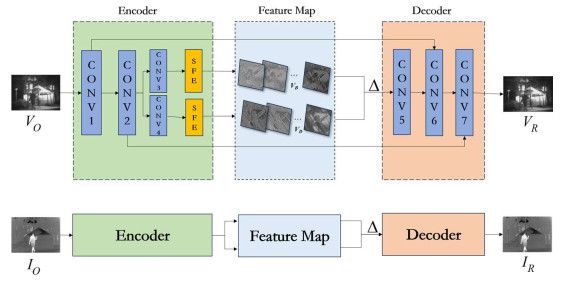

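The training network, shown in Fig. 1, consists of an encoder and a decoder. VO and IO denote the input visible and infrared images, VR and IR the reconstructed visible and infrared images, SFE the saliency feature extraction module, and Δ channel concatenation. The encoder contains four convolutional layers and the SFE module, which incorporates a channel attention mechanism that helps the model learn automatically to suppress channel-wise feature responses selectively [9]. CONV3 and CONV4 in the encoder use the Tanh activation; CONV3 outputs the background information of the image and CONV4 the detail information. After the convolutional layers, the features pass through saliency feature extraction, and the resulting background feature map (VB) and detail feature map (VD) are channel-concatenated and fed to the decoder. The SFE module uses a Sigmoid activation, which maps the convolution outputs to the range 0 to 1 and thereby controls the activation strength of the salient features.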
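Table 1 lists the SFE as a 64-to-64-channel layer with a 1×1 kernel and a Sigmoid activation, but the paper does not spell out how its output is applied. The NumPy sketch below assumes one plausible reading: a sigmoid-gated 1×1 convolution whose gate, in (0, 1), rescales the input features. The parameter names `weight` and `bias` are hypothetical, and this is not the authors' module.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sfe_gate(features, weight, bias):
    """Hypothetical SFE: sigmoid-gated 1x1 convolution (an assumption, not the paper's code).

    features: (C, H, W) feature maps
    weight:   (C, C) 1x1-convolution weights
    bias:     (C,) biases
    Returns the gated features and the gate itself.
    """
    # A 1x1 convolution is a matrix product over the channel axis at every pixel.
    logits = np.einsum("oc,chw->ohw", weight, features) + bias[:, None, None]
    gate = sigmoid(logits)  # activation strength in (0, 1)
    return features * gate, gate


rng = np.random.default_rng(0)
x = rng.standard_normal((64, 8, 8))  # 64 channels, as in Table 1
w = rng.standard_normal((64, 64)) * 0.1
b = np.zeros(64)
y, g = sfe_gate(x, w, b)
```

Because the gate never exceeds 1, each gated response is at most as large as the input, which is one way a Sigmoid can "control the activation strength" of salient features.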

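The decoder consists of three convolutional layers. The output of encoder CONV1 is connected by channel concatenation to the input of decoder CONV6, and the output of CONV2 is connected in the same way to the input of CONV7. These connections let the decoder exploit features from different levels, both global and local, which helps preserve image details and improves reconstruction quality [10].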

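The full network configuration is listed in Table 1. CONV1 and CONV7 are the layers connected to the input and the output, and both use reflection padding. This keeps the input and output images the same size, handles border pixels better, prevents artifacts at the edges of the fused image, and avoids information loss.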
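To illustrate why reflection padding preserves the image size without darkening the borders, the minimal single-channel NumPy sketch below pads by one pixel in `reflect` mode (mirroring without repeating the edge pixel, the same behaviour as PyTorch's `nn.ReflectionPad2d`) and then applies a 3×3 kernel. The function name is ours, and the sketch is not the paper's layer.

```python
import numpy as np


def conv3x3_reflect(img, kernel):
    """3x3 convolution after 1-pixel reflection padding; output has the input's H x W."""
    padded = np.pad(img, 1, mode="reflect")  # [1, 2, 3] -> [2, 1, 2, 3, 2]
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    # Cross-correlation (as in deep-learning conv layers) via shifted copies.
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + h, dj:dj + w]
    return out


flat = np.full((5, 7), 2.0)
box = np.full((3, 3), 1.0 / 9.0)
smoothed = conv3x3_reflect(flat, box)
```

On this constant image, zero padding would pull the border pixels of the averaged output below 2, whereas reflection padding leaves every pixel at 2.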

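Table 1 Network configuration in this paper

| Layers | I | O | S | Padding | Activation |
|---|---|---|---|---|---|
| CONV1 | 1 | 64 | 3 | Reflection | PReLU |
| CONV2 | 64 | 64 | 3 | 0 | PReLU |
| CONV3 | 64 | 64 | 3 | 0 | Tanh |
| CONV4 | 64 | 64 | 3 | 0 | Tanh |
| SFE | 64 | 64 | 1 | 0 | Sigmoid |
| CONV5 | 128 | 64 | 3 | 0 | PReLU |
| CONV6 | 64 | 64 | 3 | 0 | PReLU |
| CONV7 | 64 | 1 | 3 | Reflection | Sigmoid |

I: number of input channels; O: number of output channels; S: kernel size.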

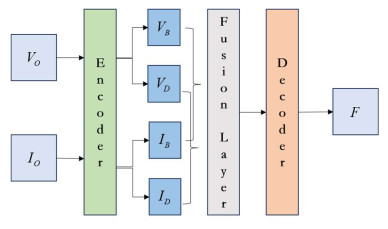

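For testing, a fusion layer is added to the network, as shown in Fig. 2. The fusion layer uses a summation strategy: the extracted background features and the extracted detail features are each merged and then fed to the decoder to obtain the fused image.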

1.2 Two-scale decomposition

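Two-scale decomposition is a special case of multi-scale transformation that decomposes the original image into a background image and a detail image. The background image is obtained by solving the following optimization problem [11], shown here for the background image IB of the infrared image: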

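$$ I_{\mathrm{B}}=\arg\min_{I_{\mathrm{B}}}\left\|I_{\mathrm{O}}-I_{\mathrm{B}}\right\|_{\mathrm{F}}^2+\lambda\left(\left\|g_x \otimes I_{\mathrm{B}}\right\|_{\mathrm{F}}^2+\left\|g_y \otimes I_{\mathrm{B}}\right\|_{\mathrm{F}}^2\right) $$ (1)

where IO is the source infrared image, ⊗ denotes convolution, gx and gy are the horizontal and vertical gradient kernels, and λ is the regularization weight. The detail image ID of the infrared image is then obtained as: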

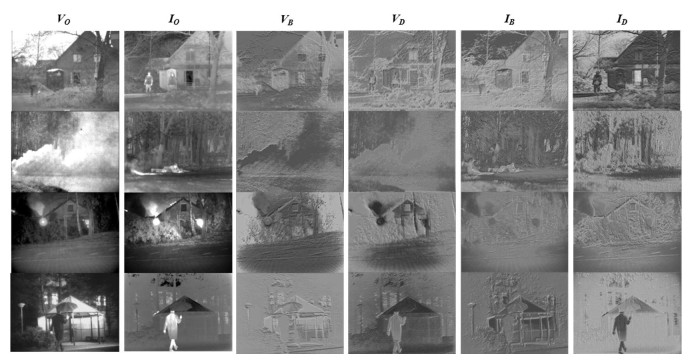

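$$ I_{\mathrm{D}}=I_{\mathrm{O}}-I_{\mathrm{B}} $$ (2)

In the encoder, the SFE decomposes each input image into two feature maps carrying different information. Fig. 3 shows the first of the 64 channels of the feature maps produced by the SFE: VB and VD are the background and detail feature maps of the visible image, and IB and ID are those of the infrared image.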
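Eq. (1) is a quadratic problem. Assuming circular boundary conditions and first-difference kernels gx = [−1, 1] and gy = [−1, 1]ᵀ (the paper specifies neither the kernels nor λ; λ = 5 below is an arbitrary illustrative value), it has a per-frequency closed-form solution:

$$ I_{\mathrm{B}}=\mathcal{F}^{-1}\left(\frac{\mathcal{F}(I_{\mathrm{O}})}{1+\lambda\left(|\mathcal{F}(g_x)|^2+|\mathcal{F}(g_y)|^2\right)}\right) $$

The NumPy sketch below implements this classical closed form together with eq. (2). It only illustrates the two-scale model; in the proposed network, the decomposition is learned by the encoder.

```python
import numpy as np


def two_scale_decompose(img, lam=5.0):
    """Closed-form solution of eq.(1) via FFT (circular boundary assumed), plus eq.(2).

    img: 2-D grayscale array (at least 2x2); lam: smoothing weight (assumed value).
    Returns (base, detail) with img == base + detail.
    """
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    # First-difference kernels g_x = [-1, 1] and g_y = [-1, 1]^T, zero-padded to image size.
    gx = np.zeros((h, w))
    gx[0, 0], gx[0, 1] = -1.0, 1.0
    gy = np.zeros((h, w))
    gy[0, 0], gy[1, 0] = -1.0, 1.0
    # Per-frequency minimiser of |F(I) - F(B)|^2 + lam * (|F(gx)|^2 + |F(gy)|^2) * |F(B)|^2.
    denom = 1.0 + lam * (np.abs(np.fft.fft2(gx)) ** 2 + np.abs(np.fft.fft2(gy)) ** 2)
    base = np.real(np.fft.ifft2(np.fft.fft2(img) / denom))
    detail = img - base  # eq.(2)
    return base, detail


rng = np.random.default_rng(1)
ir = rng.random((32, 32))
ir_base, ir_detail = two_scale_decompose(ir)
```

The gradient kernels have zero response at zero frequency, so the base image keeps the mean brightness of the source, while every other frequency is attenuated; this is why the detail image carries the edges and texture.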

2. Loss function design

2.1 Image decomposition loss

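Visual inspection of Fig. 3 shows that the background feature maps VB and IB look similar, whereas the detail feature maps VD and ID differ considerably. The decomposition loss should therefore enlarge the difference between the detail feature maps while reducing the difference between the background feature maps, and the balance between these two goals is set by the loss weights. Balancing background and detail features in this way helps the reconstructed images retain the details of the source images while keeping the background consistent and coherent.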

The image decomposition loss is defined as:

$$ L_{\mathrm{D}}=\alpha_1 \tanh\left(\operatorname{Loss}\left(I_{\mathrm{B}}, V_{\mathrm{B}}\right)\right)-\alpha_2 \tanh\left(\operatorname{Loss}\left(I_{\mathrm{D}}, V_{\mathrm{D}}\right)\right) $$ (3)

where Loss(IB, VB) and Loss(ID, VD) are the mean absolute differences between the corresponding background and detail feature maps of the infrared and visible images:

$$ \operatorname{Loss}\left(I_{\mathrm{B}}, V_{\mathrm{B}}\right)=\frac{1}{n} \sum_{i=1}^n\left|V_{\mathrm{B}_i}-I_{\mathrm{B}_i}\right| $$ (4)

$$ \operatorname{Loss}\left(I_{\mathrm{D}}, V_{\mathrm{D}}\right)=\frac{1}{n} \sum_{i=1}^n\left|V_{\mathrm{D}_i}-I_{\mathrm{D}_i}\right| $$ (5)

where n is the number of elements in a feature map, and α1 and α2 are tuning parameters.
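A direct NumPy transcription of eqs. (3) to (5), using the weights reported in Section 3.1 (α1 = 1, α2 = 0.5), is sketched below. It works on plain arrays rather than autograd tensors, so it shows the arithmetic of the loss, not a trainable implementation.

```python
import numpy as np


def l1_mean(a, b):
    """Eqs.(4)/(5): mean absolute difference over all n elements."""
    return float(np.mean(np.abs(a - b)))


def decomposition_loss(ir_b, vis_b, ir_d, vis_d, alpha1=1.0, alpha2=0.5):
    """Eq.(3): pull background maps together, push detail maps apart."""
    return alpha1 * np.tanh(l1_mean(ir_b, vis_b)) - alpha2 * np.tanh(l1_mean(ir_d, vis_d))


z = np.zeros((64, 8, 8))
same_everything = decomposition_loss(z, z, z, z)
details_apart = decomposition_loss(z, z, z, z + 1.0)  # equals -0.5 * tanh(1)
```

Because tanh saturates at 1, the negative detail term is bounded below by −α2, so in our reading the optimizer cannot lower L_D indefinitely just by inflating the difference between the detail maps.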

2.2 Image reconstruction loss

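Image reconstruction must preserve the pixel intensity and texture information of the input images. Because visible images contain richer texture than infrared images, a gradient coefficient penalty is applied to the visible image as a regularizer. The reconstruction loss is defined as: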

$$ \begin{aligned} L_{\mathrm{R}}= & \ \alpha_3 \operatorname{SSIM}\left(V_{\mathrm{O}}, V_{\mathrm{R}}\right)+\operatorname{MSELoss}\left(V_{\mathrm{O}}, V_{\mathrm{R}}\right) \\ & +\alpha_4 \operatorname{SSIM}\left(I_{\mathrm{O}}, I_{\mathrm{R}}\right)+\operatorname{MSELoss}\left(I_{\mathrm{O}}, I_{\mathrm{R}}\right) \\ & +\alpha_5 \operatorname{loss}\left(\operatorname{SG}\left(V_{\mathrm{O}}\right), \operatorname{SG}\left(V_{\mathrm{R}}\right)\right) \end{aligned} $$ (6)

where VO and IO are the input visible and infrared images, VR and IR are the reconstructed visible and infrared images, and SG(VO) and SG(VR) denote the spatial frequency computed from VO and VR. SSIM is the structural similarity index, which accounts for the luminance, contrast and structure of the images:

$$ \operatorname{SSIM}\left(X_{\mathrm{O}}, X_{\mathrm{R}}\right)=\frac{\left(2 \mu_{X_{\mathrm{O}}} \mu_{X_{\mathrm{R}}}+C_1\right)\left(2 \sigma_{X_{\mathrm{O}}, X_{\mathrm{R}}}+C_2\right)}{\left(\mu_{X_{\mathrm{O}}}^2+\mu_{X_{\mathrm{R}}}^2+C_1\right)\left(\sigma_{X_{\mathrm{O}}}^2+\sigma_{X_{\mathrm{R}}}^2+C_2\right)} $$ (7)

where XO and XR denote an input image and its reconstruction, and σXO,XR is their covariance, defined in eq. (12). The mean gray level is used as the estimate of luminance:

$$ \mu_{X_{\mathrm{O}}}=\frac{1}{N} \sum_{i=1}^N X_{\mathrm{O}_i} $$ (8)

$$ \mu_{X_{\mathrm{R}}}=\frac{1}{N} \sum_{i=1}^N X_{\mathrm{R}_i} $$ (9)

where N is the number of pixels. The standard deviation measures image contrast:

$$ \sigma_{X_{\mathrm{O}}}=\left(\frac{1}{N-1} \sum_{i=1}^N\left(X_{\mathrm{O}_i}-\mu_{X_{\mathrm{O}}}\right)^2\right)^{\frac{1}{2}} $$ (10)

$$ \sigma_{X_{\mathrm{R}}}=\left(\frac{1}{N-1} \sum_{i=1}^N\left(X_{\mathrm{R}_i}-\mu_{X_{\mathrm{R}}}\right)^2\right)^{\frac{1}{2}} $$ (11)

and the covariance measures the structural correlation between the two images:

$$ \sigma_{X_{\mathrm{O}}, X_{\mathrm{R}}}=\frac{1}{N-1} \sum_{i=1}^N\left(X_{\mathrm{O}_i}-\mu_{X_{\mathrm{O}}}\right)\left(X_{\mathrm{R}_i}-\mu_{X_{\mathrm{R}}}\right) $$ (12)

Minimizing the mean squared error loss brings the reconstructed image as close as possible to the original image, which improves the quality of the fused image:

$$ \operatorname{MSELoss}\left(X_{\mathrm{O}}, X_{\mathrm{R}}\right)=\frac{1}{N} \sum_{i=1}^N\left(X_{\mathrm{O}_i}-X_{\mathrm{R}_i}\right)^2 $$ (13)

In eq. (7), C1 and C2 are small constants that prevent division by zero. α3, α4 and α5 are tuning parameters.
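The NumPy sketch below evaluates eq. (6) under three assumptions that the paper does not state: (1) each SSIM term enters the loss as 1 − SSIM, since minimizing raw SSIM would push reconstructions away from their inputs; (2) SG(·) is the spatial frequency, and the α5 term is the absolute difference of the two scalar values; (3) images lie in [0, 1], with C1 = 0.01² and C2 = 0.03², the constants commonly used with SSIM. SSIM here uses the global statistics of eqs. (7) to (12); practical SSIM implementations usually average it over local windows instead.

```python
import numpy as np

C1, C2 = 0.01 ** 2, 0.03 ** 2  # common SSIM constants for images in [0, 1] (assumption)


def ssim_global(x, y):
    """Eqs.(7)-(12) evaluated once over the whole image (no sliding window)."""
    x = np.asarray(x, dtype=np.float64).ravel()
    y = np.asarray(y, dtype=np.float64).ravel()
    mu_x, mu_y = x.mean(), y.mean()                       # eqs.(8)-(9)
    sd_x, sd_y = x.std(ddof=1), y.std(ddof=1)             # eqs.(10)-(11)
    cov = np.sum((x - mu_x) * (y - mu_y)) / (x.size - 1)  # eq.(12)
    return ((2 * mu_x * mu_y + C1) * (2 * cov + C2)) / (
        (mu_x ** 2 + mu_y ** 2 + C1) * (sd_x ** 2 + sd_y ** 2 + C2))


def mse(x, y):
    """Eq.(13)."""
    return float(np.mean((np.asarray(x, dtype=np.float64) - y) ** 2))


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from row-wise and column-wise first differences."""
    img = np.asarray(img, dtype=np.float64)
    rf2 = np.mean(np.diff(img, axis=1) ** 2)
    cf2 = np.mean(np.diff(img, axis=0) ** 2)
    return float(np.sqrt(rf2 + cf2))


def reconstruction_loss(v_o, v_r, i_o, i_r, a3=5.0, a4=5.0, a5=10.0):
    """Eq.(6) with SSIM used as (1 - SSIM); weights from Section 3.1."""
    return (a3 * (1 - ssim_global(v_o, v_r)) + mse(v_o, v_r)
            + a4 * (1 - ssim_global(i_o, i_r)) + mse(i_o, i_r)
            + a5 * abs(spatial_frequency(v_o) - spatial_frequency(v_r)))


rng = np.random.default_rng(2)
v_in, i_in = rng.random((16, 16)), rng.random((16, 16))
perfect = reconstruction_loss(v_in, v_in, i_in, i_in)        # identical reconstructions
degraded = reconstruction_loss(v_in, 0.5 * v_in, i_in, i_in)  # dimmed visible reconstruction
```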

The total training loss Ltotal is:

$$ L_{\mathrm{total}}=L_{\mathrm{D}}+L_{\mathrm{R}} $$ (14)

2.3 Fusion strategy

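The fusion strategy plays an important role in IVIF. The fusion layer in this paper uses additive fusion: corresponding feature maps are summed element-wise, so the pixel values at each position of the two feature maps are added to give the fused feature map. This strategy is simple, intuitive and easy to implement.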

$$ \begin{aligned} F_1 &= V_1 \oplus I_1 \\ F_2 &= V_2 \oplus I_2 \\ F_{\mathrm{B}} &= V_{\mathrm{B}} \oplus I_{\mathrm{B}} \\ F_{\mathrm{D}} &= V_{\mathrm{D}} \oplus I_{\mathrm{D}} \end{aligned} $$ (15)

where V1 and V2 are the outputs of CONV1 and CONV2 for the visible image, and I1 and I2 are the corresponding outputs for the infrared image; VB and VD are the background and detail feature maps of the visible image, and IB and ID are those of the infrared image; ⊕ denotes element-wise addition. The fused feature maps F1, F2, FB and FD are fed to the decoder to obtain the fused image.
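Eq. (15) can be sketched in NumPy as below. The dictionary keys "1", "2", "B" and "D" are hypothetical names for the CONV1 output, the CONV2 output, the background maps and the detail maps. The final concatenation assumes that, as during training, FB and FD are channel-concatenated before CONV5, which matches the 128 input channels listed for CONV5 in Table 1.

```python
import numpy as np


def additive_fusion(vis_feats, ir_feats):
    """Eq.(15): element-wise sum of each pair of corresponding feature maps.

    vis_feats, ir_feats: dicts with the same keys, holding arrays of equal shape.
    """
    if vis_feats.keys() != ir_feats.keys():
        raise ValueError("visible and infrared features must have the same keys")
    fused = {}
    for key, v in vis_feats.items():
        i = ir_feats[key]
        if v.shape != i.shape:
            raise ValueError(f"shape mismatch for {key}: {v.shape} vs {i.shape}")
        fused[key] = v + i  # the element-wise addition in eq.(15)
    return fused


rng = np.random.default_rng(3)
keys = ("1", "2", "B", "D")
vis = {k: rng.standard_normal((64, 8, 8)) for k in keys}  # 64 channels per Table 1
ir = {k: rng.standard_normal((64, 8, 8)) for k in keys}
fused = additive_fusion(vis, ir)
# Assumed to mirror training: FB and FD are concatenated along channels before CONV5.
decoder_in = np.concatenate([fused["B"], fused["D"]], axis=0)
```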

3. Experiments and analysis

3.1 Model training

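The infrared and visible source images used for training come from the public TNO dataset, which contains multispectral night-time images of various military-relevant scenes. The images were registered across camera systems operating in different bands and are provided as grayscale images at several resolutions. All images were converted to grayscale before training. To enlarge the training set, 36 pairs of infrared and visible images were selected and cropped into 128×128-pixel patches with a stride of 12, yielding 42233 pairs of infrared and visible patches as the training set.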
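A minimal sketch of the patch extraction follows, assuming patches are taken on a regular grid with a stride of 12. The same grid must be applied to both images of a registered pair so that the infrared and visible patches stay aligned. The image sizes used here are illustrative only and do not come from TNO.

```python
import numpy as np


def crop_patches(img, size=128, stride=12):
    """Slide a size x size window over a 2-D image with the given stride."""
    h, w = img.shape
    patches = [img[r:r + size, c:c + size]
               for r in range(0, h - size + 1, stride)
               for c in range(0, w - size + 1, stride)]
    if not patches:
        return np.empty((0, size, size), dtype=img.dtype)
    return np.stack(patches)


def num_patches(h, w, size=128, stride=12):
    """Closed-form patch count for one image (0 if the image is smaller than a patch)."""
    if h < size or w < size:
        return 0
    return ((h - size) // stride + 1) * ((w - size) // stride + 1)


# Illustrative registered pair; identical grids keep the patches aligned.
ir_img = np.zeros((152, 140))
vis_img = np.ones((152, 140))
ir_patches, vis_patches = crop_patches(ir_img), crop_patches(vis_img)
```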

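The training settings are as follows: batch size 24, 180 epochs, and the Adam optimizer with an initial learning rate of 0.001. A step decay reduces the learning rate to one tenth every 60 epochs so that the network parameters are adjusted more finely as the loss is minimized. The loss weights are α1 = 1, α2 = 0.5, α3 = 5, α4 = 5 and α5 = 10.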
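The step decay described above, together with the implied number of iterations, can be checked with a few lines of Python. The schedule is equivalent to PyTorch's `torch.optim.lr_scheduler.StepLR(optimizer, step_size=60, gamma=0.1)`, although the paper does not say which scheduler class was used. The iteration count assumes the final partial batch of each epoch is kept (1759 batches per epoch if it is dropped).

```python
import math


def step_lr(epoch, base_lr=1e-3, step=60, gamma=0.1):
    """Learning rate for a 0-indexed epoch under step decay (x0.1 every 60 epochs)."""
    return base_lr * gamma ** (epoch // step)


batches_per_epoch = math.ceil(42233 / 24)  # 42233 training pairs, batch size 24
total_iterations = batches_per_epoch * 180
```

With 180 epochs, the learning rate therefore takes three values: 1e-3 for epochs 0 to 59, 1e-4 for epochs 60 to 119, and 1e-5 for epochs 120 to 179.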

The experiments were run on a computer with an Intel Core i5-13400F CPU (2.5 GHz), 32 GB of RAM and an RTX 4070 GPU under Windows 11, and the method was implemented in PyTorch.

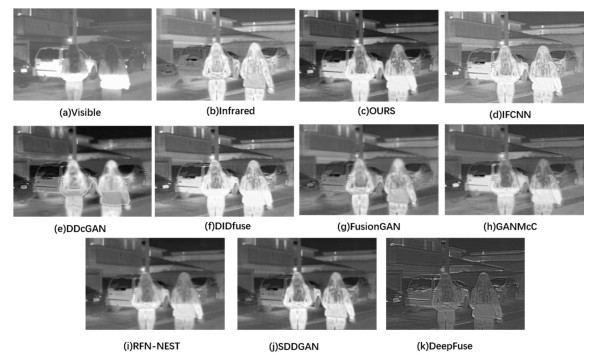

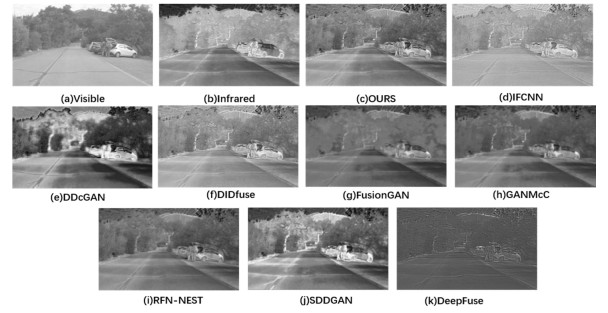

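To evaluate the proposed algorithm comprehensively, 40 pairs of infrared and visible images were selected from each of the TNO and FLIR datasets for testing, and two representative groups from each dataset are shown. The proposed method is compared qualitatively and quantitatively with eight publicly available, representative infrared and visible image fusion methods: IFCNN [12], DDcGAN [13], DIDFuse, FusionGAN, GANMcC [14], RFN-NEST, SDDGAN and DeepFuse [15]. All eight methods use the default parameters from their papers. Seven quantitative metrics are used for objective evaluation: information entropy (EN), spatial frequency (SF), average gradient (AG), visual information fidelity (VIF), peak signal-to-noise ratio (PSNR), mutual information (MI) and standard deviation (SD). Together they measure the sharpness and information content of the fused image and its difference from the source images.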
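For readers who want to compute comparable numbers, the NumPy sketch below gives common textbook definitions of five of the metrics (EN, SF, AG, SD and MI) for 8-bit grayscale images. Fusion papers differ in details such as difference stencils, normalization and how MI is combined over the two sources, and the paper does not specify its implementations, so this sketch will not necessarily reproduce Tables 2 and 3. VIF and PSNR are omitted.

```python
import numpy as np


def entropy(img, bins=256):
    """EN: Shannon entropy (bits) of the 8-bit gray-level histogram."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from row-wise and column-wise first differences."""
    img = np.asarray(img, dtype=np.float64)
    return float(np.sqrt(np.mean(np.diff(img, axis=1) ** 2) + np.mean(np.diff(img, axis=0) ** 2)))


def average_gradient(img):
    """AG: mean of sqrt((dx^2 + dy^2) / 2) over the (H-1) x (W-1) grid."""
    img = np.asarray(img, dtype=np.float64)
    dx = img[:-1, 1:] - img[:-1, :-1]
    dy = img[1:, :-1] - img[:-1, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def standard_deviation(img):
    """SD: population standard deviation of the gray levels."""
    img = np.asarray(img, dtype=np.float64)
    return float(np.sqrt(np.mean((img - img.mean()) ** 2)))


def mutual_information(a, b, bins=256):
    """MI (bits) between two 8-bit images from their joint histogram."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins, range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px, py = pxy.sum(axis=1), pxy.sum(axis=0)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px[:, None] * py[None, :])[nz])))


def fusion_mi(fused, ir, vis):
    """One common fusion MI score: MI(F, IR) + MI(F, VIS)."""
    return mutual_information(fused, ir) + mutual_information(fused, vis)


flat = np.full((8, 8), 100.0)
two = np.zeros((8, 8))
two[:, 4:] = 255.0  # half black, half white
```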

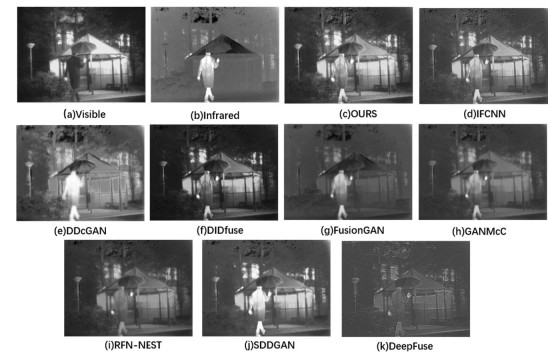

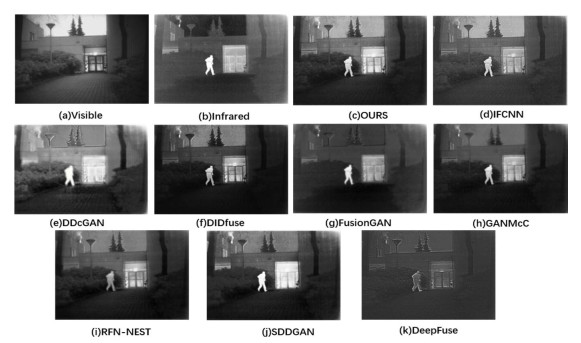

3.2 Subjective evaluation of fusion results

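As Figs. 4 to 7 show, IFCNN renders highlighted objects weakly and has poor contrast, falling clearly short of the proposed method. FusionGAN produces fused images with low sharpness and indistinct background contours. DDcGAN, GANMcC and SDDGAN also use generative adversarial networks; their results have better detail and contrast than those of FusionGAN, but compared with the proposed method they are blurrier, preserve background object contours poorly and contain artifacts, most noticeably around the people in Figs. 4, 5 and 6. DIDFuse retains texture and infrared target information well, but compared with the proposed method its results are visually too dark and less detailed, for example the street lamp and the contours of the grass in the background of Fig. 4, the ground in Fig. 5, and the mountains and trees in Fig. 7. RFN-NEST does not preserve infrared information well, and its results are dark overall with poor visual quality. DeepFuse produces images that contain little information and have poor contrast; in natural-landscape images in particular, little useful information can be obtained from them.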

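Overall, the compared methods suffer from poor contrast, indistinct target and background contours, and blurred boundaries around the mountains and trees. In contrast, the proposed method produces fused images with bright targets, sharp contour edges and rich details.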

3.3 Objective evaluation of fusion results

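For all the metrics used here, a larger value indicates better fusion quality. Tables 2 and 3 give the mean of each metric over all test images; the best value in each column is shown in bold.

Table 2 Comparison of objective evaluation indicators for TNO dataset fusion results

| Method | EN | SF | AG | VIF | PSNR | MI | SD |
|---|---|---|---|---|---|---|---|
| Ours | 7.410 | **13.446** | 5.220 | **0.631** | 62.519 | 2.208 | 44.064 |
| DDcGAN | **7.485** | 13.283 | **5.374** | 0.513 | 60.939 | 1.838 | **50.416** |
| DIDFuse | 6.816 | 12.311 | 4.531 | 0.597 | 61.658 | 2.207 | 41.974 |
| FusionGAN | 6.468 | 6.453 | 2.488 | 0.410 | 61.319 | 2.213 | 28.634 |
| GANMcC | 6.670 | 6.447 | 2.650 | 0.517 | 62.265 | **2.250** | 32.664 |
| RFN-NEST | 6.962 | 6.320 | 2.876 | 0.550 | 63.089 | 2.195 | 37.670 |
| SDDGAN | 7.188 | 9.597 | 3.882 | 0.554 | 61.868 | 2.223 | 48.578 |
| IFCNN | 6.970 | 13.319 | 5.158 | 0.628 | **63.641** | 2.158 | 40.248 |
| DeepFuse | 5.704 | 13.422 | 4.813 | 0.074 | 61.733 | 0.780 | 15.780 |

Table 3 Comparison of objective evaluation indicators for FLIR dataset fusion results

| Method | EN | SF | AG | VIF | PSNR | MI | SD |
|---|---|---|---|---|---|---|---|
| Ours | 7.367 | **17.134** | **6.625** | **0.642** | **62.579** | 2.692 | 49.959 |
| DDcGAN | **7.593** | 13.148 | 5.182 | 0.393 | 60.112 | 2.452 | 56.133 |
| DIDFuse | 7.376 | 12.563 | 6.032 | 0.558 | 61.950 | 2.683 | 48.315 |
| FusionGAN | 7.029 | 8.831 | 3.455 | 0.339 | 59.606 | 2.677 | 37.268 |
| GANMcC | 7.204 | 8.766 | 3.772 | 0.450 | 60.144 | 2.552 | 42.176 |
| RFN-NEST | 7.248 | 8.487 | 3.671 | 0.464 | 60.342 | 2.586 | 43.801 |
| SDDGAN | 7.499 | 10.852 | 4.648 | 0.455 | 59.976 | **2.873** | **56.163** |
| IFCNN | 7.111 | 16.315 | 6.465 | 0.524 | 62.360 | 2.660 | 38.091 |
| DeepFuse | 5.870 | 14.370 | 5.130 | 0.079 | 58.760 | 1.101 | 16.943 |

On the TNO dataset, the proposed method ranks second on EN, indicating that its fused images carry rich information, and it achieves the best SF and VIF. A high SF means that the fused image contains more detail and high-frequency information, and a high VIF means that the generated image agrees closely with human visual perception.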

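Although the method was trained on the TNO dataset, it achieves the best values on four metrics (SF, AG, VIF and PSNR) on the FLIR dataset, which indicates good generalization. The best AG shows that its fused images are the sharpest, and the best PSNR shows that they have lower distortion relative to the source images than those of the other methods. This is consistent with the design goal of producing fused images with bright targets and rich details.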

3.4 Results of different fusion strategies with the proposed algorithm

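The fusion strategy plays a crucial role in image fusion. To examine its effect on the proposed algorithm, the strategy of the fusion layer is replaced with a weighted fusion strategy (L1 Norm), and all other settings are kept the same as in the proposed algorithm.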

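The weighted fusion strategy first takes the absolute value of the input feature maps to obtain their activity maps. A 3×3 uniform-weight kernel is then convolved with each activity map, averaging the window around every pixel to give a smoothed activity map. The smoothed activity maps determine the weight of each feature map in the fusion, and the feature maps are multiplied by their weights and summed to obtain the fused tensor. Fig. 8 compares the results of the proposed fusion strategy with those of the weighted fusion strategy.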
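The weighted strategy above resembles the L1-norm rule popularized by DenseFuse. The NumPy sketch below is one reading of it, with two assumptions that the text leaves open: the activity map is the L1 norm across channels (absolute values summed per pixel), and the 3×3 average uses edge replication at the borders. The small `eps` guards against division by zero where both activities vanish.

```python
import numpy as np


def box_mean3(a):
    """3x3 uniform average with edge replication (keeps the H x W size)."""
    p = np.pad(a, 1, mode="edge")
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def l1_norm_fusion(feat_a, feat_b, eps=1e-12):
    """Weighted fusion of two (C, H, W) feature tensors by smoothed L1 activity."""
    # Activity maps: L1 norm over channels, then 3x3 averaging.
    act_a = box_mean3(np.abs(feat_a).sum(axis=0))
    act_b = box_mean3(np.abs(feat_b).sum(axis=0))
    w_a = act_a / (act_a + act_b + eps)  # per-pixel weight of feat_a
    w_b = 1.0 - w_a
    return w_a[None] * feat_a + w_b[None] * feat_b, w_a


rng = np.random.default_rng(4)
fa = rng.standard_normal((16, 10, 10))
same, w_same = l1_norm_fusion(fa, fa)                   # equal activity: weights near 0.5
only_a, w_only = l1_norm_fusion(fa, np.zeros_like(fa))  # zero partner: weights near 1
```

Compared with the additive rule of eq. (15), this adds two absolute-value reductions, two box filters and a division per pixel, which is the extra computation discussed below.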

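Table 4 lists the metrics of the two fusion strategies on the TNO dataset, and Table 5 lists those on the FLIR dataset. On both datasets, the proposed strategy performs better on EN, SF, AG, VIF, PSNR and SD. This indicates that it is more effective at preserving details and suppressing noise, and that its fused images are clearly sharper and higher in contrast than those of the weighted strategy. Only on MI does the proposed strategy fall behind L1 Norm, which suggests that the weighted strategy transfers more of the source images' information into the fused image.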

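Table 4 Comparison of objective evaluation indicators for different fusion strategies (TNO)

| Strategy | EN | SF | AG | VIF | PSNR | MI | SD |
|---|---|---|---|---|---|---|---|
| Ours | 7.410 | 13.446 | 5.220 | 0.631 | 62.519 | 2.208 | 44.064 |
| L1 Norm | 6.614 | 8.142 | 3.062 | 0.602 | 61.651 | 2.821 | 33.609 |

Table 5 Comparison of objective evaluation indicators for different fusion strategies (FLIR)

| Strategy | EN | SF | AG | VIF | PSNR | MI | SD |
|---|---|---|---|---|---|---|---|
| Ours | 7.367 | 17.134 | 6.625 | 0.642 | 62.579 | 2.692 | 49.959 |
| L1 Norm | 7.113 | 11.608 | 4.691 | 0.547 | 62.299 | 2.948 | 39.677 |

Compared with the proposed strategy, the weighted strategy focuses more on the differences between the pixel values of the two images and is considerably more expensive to compute. This extra computation does not improve the quality of the generated images; in some cases it even introduces noise and artifacts.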

4. Conclusion

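This paper has proposed an infrared and visible image fusion method based on deep image decomposition that produces high-quality fused images retaining the advantages of the source images. In particular, a saliency feature extraction module is integrated into the encoder to emphasize the edge and texture features of targets. In experiments on the public TNO and FLIR datasets, the fused images show reasonable brightness and clear detail and texture. Among the compared algorithms, the method achieves the best SF and VIF on TNO and the best SF, AG, VIF and PSNR on FLIR, meeting the expected goals. A limitation is that the method mainly addresses images captured under normal illumination and is less effective for low-light image fusion; future work will address this.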


[1] TANG L F, YUAN J, MA J Y. Image fusion in the loop of high-level vision tasks: a semantic-aware real-time infrared and visible image fusion network[J]. Information Fusion, 2022, 82: 28-42. DOI: 10.1016/j.inffus.2021.12.004

[2] LUO Di, WANG Congqing, ZHOU Yongjun. A visible and infrared image fusion method based on generative adversarial networks and attention mechanism[J]. Infrared Technology, 2021, 43(6): 566-574. http://hwjs.nvir.cn/article/id/3403109e-d8d7-45ed-904f-eb4bc246275a

[3] ZHANG X. Deep learning-based multi-focus image fusion: a survey and a comparative study[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 44(9): 4819-4838. http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9428544

[4] MA J Y, ZHOU Y. Infrared and visible image fusion via gradientlet filter[J]. Computer Vision and Image Understanding, 2020, 197-198: 103016.

[5] LI G, LIN Y, QU X. An infrared and visible image fusion method based on multi-scale transformation and norm optimization[J]. Information Fusion, 2021, 71: 109-129. DOI: 10.1016/j.inffus.2021.02.008

[6] MA J Y, YU W, LIANG P W, et al. FusionGAN: a generative adversarial network for infrared and visible image fusion[J]. Information Fusion, 2019, 48: 11-26. DOI: 10.1016/j.inffus.2018.09.004

[7] LIU Jia, LI Dengfeng. Infrared and visible light image fusion based on Mahalanobis distance and guided filter weighting[J]. Infrared Technology, 2021, 43(2): 162-169. http://hwjs.nvir.cn/article/id/56484763-c7b0-4273-a087-8d672e8aba9a

[8] TANG L F, YUAN J, MA J Y, et al. PIAFusion: a progressive infrared and visible image fusion network based on illumination aware[J]. Information Fusion, 2022, 83: 79-92. http://www.sciencedirect.com/science/article/pii/S156625352200032X?dgcid=rss_sd_all

[9] TANG L F, XIANG X Y, ZHANG H, et al. DIVFusion: darkness-free infrared and visible image fusion[J]. Information Fusion, 2023, 91: 477-493. DOI: 10.1016/j.inffus.2022.10.034

[10] YU F, JUN X W, Tariq D. Image fusion based on generative adversarial network consistent with perception[J]. Information Fusion, 2021, 72: 110-125. DOI: 10.1016/j.inffus.2021.02.019

[11] HE Le, LI Zhongwei, LUO Cai, et al. Infrared and visible image fusion based on cavity convolution and dual attention mechanism[J]. Infrared Technology, 2023, 45(7): 732-738. http://hwjs.nvir.cn/article/id/66ccbb6b-ad75-418f-ae52-cc685713d91b

[12] ZHANG Y, LIU Y, SUN P, et al. IFCNN: a general image fusion framework based on convolutional neural network[J]. Information Fusion, 2020, 54: 99-118. DOI: 10.1016/j.inffus.2019.07.011

[13] MA J Y, XU H, JIANG J, et al. DDcGAN: a dual-discriminator conditional generative adversarial network for multi-resolution image fusion [J]. IEEE Transactions on Image Processing, 2020, 29: 4980-4995. http://www.xueshufan.com/publication/3011768656

[14] MA J, ZHANG H, SHAO Z, et al. GANMcC: a generative adversarial network with multiclassification constraints for infrared and visible image fusion[J]. IEEE Transactions on Instrumentation and Measurement, 2021, 70: 1-14. http://doc.paperpass.com/foreign/rgArti2020150972012.html

[15] PRABHAKAR K R, SRIKAR V S, BABU R V. DeepFuse: a deep unsupervised approach for exposure fusion with extreme exposure image pairs[C]// IEEE International Conference on Computer Vision (ICCV), 2017: 4724-4732.
