Human Fall Detection Method Based on Key Points in Infrared Images


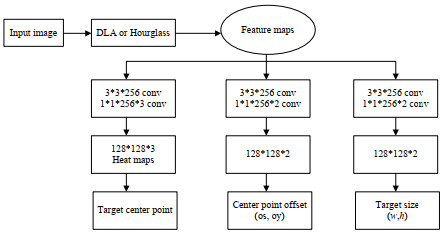

Abstract: Existing human fall detection methods are susceptible to lighting changes, adapt poorly to complex environments, and have high false detection rates. To address these problems, an infrared image human fall detection method based on key point estimation is proposed. Because the method uses infrared images, it effectively eliminates the influence of factors such as lighting. First, a neural network locates the center point of each human target; second, the target attributes, such as target size and label, are regressed at that point to obtain the detection results. An infrared camera was used to collect images of human falls under different conditions, and these images were used to build an infrared human fall dataset. In experiments on this dataset, the proposed method achieved a recognition rate above 97%. The experimental results show that the proposed method achieves high accuracy and speed in infrared image human fall detection, with higher accuracy than YOLOv3 and Faster R-CNN.
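The abstract describes detection as locating each person's center point and then regressing attributes such as size and label at that point. The paper's network and post-processing are not given here, so the following is only a minimal NumPy sketch of CenterNet-style decoding under stated assumptions: one heatmap channel per label (the hypothetical classes `standing` and `fallen`), a width/height map, a sub-pixel offset map, an output stride of 4, and a 0.3 score threshold. Peaks are found with a 3×3 max-pool test instead of a separate non-maximum suppression step.

```python
import numpy as np


def local_maxima(heatmap: np.ndarray) -> np.ndarray:
    """Return a boolean mask that is True where a pixel equals the maximum
    of its 3x3 neighbourhood (the max-pool peak test used in place of NMS)."""
    _, h, w = heatmap.shape
    # Pad with -inf so border pixels only compare against real neighbours.
    padded = np.pad(heatmap, ((0, 0), (1, 1), (1, 1)), constant_values=-np.inf)
    neigh_max = np.full_like(heatmap, -np.inf)
    for dy in range(3):
        for dx in range(3):
            neigh_max = np.maximum(neigh_max, padded[:, dy:dy + h, dx:dx + w])
    return heatmap == neigh_max


def decode(heatmap, size, offset, labels, k=10, threshold=0.3, stride=4):
    """Turn centre-point network outputs into boxes (hypothetical layout).

    heatmap: (C, H, W) per-class centre scores in [0, 1]
    size:    (2, H, W) box width/height in input-image pixels
    offset:  (2, H, W) sub-pixel x/y offset of the centre
    Returns a list of (label, score, x1, y1, x2, y2).
    """
    c, h, w = heatmap.shape
    peaks = np.where(local_maxima(heatmap), heatmap, 0.0).ravel()
    detections = []
    for idx in np.argsort(peaks)[::-1][:k]:  # highest-scoring peaks first
        score = float(peaks[idx])
        if score < threshold:
            break
        cls, y, x = np.unravel_index(idx, (c, h, w))
        # Centre in input-image coordinates.
        cx = (x + offset[0, y, x]) * stride
        cy = (y + offset[1, y, x]) * stride
        bw, bh = size[0, y, x], size[1, y, x]
        detections.append((labels[cls], score,
                           cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2))
    return detections


# Synthetic example: one "fallen" person centred at output cell (y=10, x=20).
labels = ["standing", "fallen"]
yy, xx = np.mgrid[0:32, 0:32]
heatmap = np.zeros((2, 32, 32))
heatmap[1] = 0.9 * np.exp(-((xx - 20) ** 2 + (yy - 10) ** 2) / (2 * 2.0 ** 2))
size = np.zeros((2, 32, 32))
size[:, 10, 20] = (160.0, 60.0)  # a lying body is wider than it is tall
offset = np.full((2, 32, 32), 0.5)

dets = decode(heatmap, size, offset, labels)
print(dets)
```

Because the label is read from the heatmap channel in this sketch, locating a person and classifying them as fallen happen in a single pass; the maps themselves would have to come from a trained network such as the one the paper proposes.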


Key words:

- infrared image
- key point estimation
- fall detection
- neural network


Table 1. Comparison of experimental results

| Algorithm | Accuracy/% | Time/(ms/frame) |
|---|---|---|
| YOLOv3 | 96.9 | 0.0421 |
| Faster R-CNN | 95.7 | 0.441 |
| Ours | 98.4 | 0.0462 |

