Saliency-based Multiband Image Synchronization Fusion Method


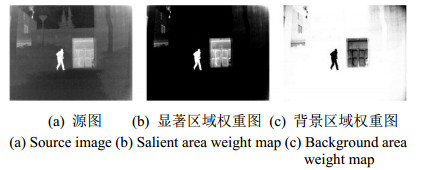

Abstract: To address the low contrast and inconspicuous salient targets of multi-band fused images, this paper proposes a saliency-based synchronous fusion method for multi-band images. First, the near-infrared image serves as the data fidelity term, while the infrared and visible images supply infrared saliency information and detail information, respectively, to the fused result. Second, a visual-saliency-based method for extracting infrared salient regions is used to construct a weight map, which counters the weakly highlighted salient regions and blurred edges in the fused result. Finally, the alternating direction method of multipliers (ADMM) is used to solve the model and obtain the fused image. The results show that, compared with representative image fusion algorithms, the proposed algorithm preserves the thermal radiation information of the infrared image while retaining clear details, and outperforms those algorithms on several objective evaluation metrics.


Key words:

- image fusion
- multi-band images
- saliency
- total variation
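The abstract names ADMM as the solver and lists total variation among the keywords, but this page does not give the fusion model itself. The sketch below is therefore only a generic illustration of how ADMM handles a total-variation term, applied to a 1-D denoising problem, $\min_x \tfrac{1}{2}\|x-y\|_2^2 + \lambda\|Dx\|_1$. It is not the paper's model: the function names, the parameters `lam` and `rho`, and the dense linear solve are all assumptions made for illustration.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def tv_denoise_admm(y, lam=1.0, rho=1.0, n_iter=200):
    """Illustrative ADMM for min_x 0.5*||x - y||^2 + lam*||D x||_1 in 1-D.

    The splitting variable z stands in for D x, and u is the scaled dual.
    Hypothetical helper, not the method described in the abstract.
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    D = np.diff(np.eye(n), axis=0)      # forward differences, shape (n-1, n)
    A = np.eye(n) + rho * D.T @ D       # system matrix of the x-update
    x = y.copy()
    z = D @ x
    u = np.zeros(n - 1)
    for _ in range(n_iter):
        # x-update: quadratic subproblem, solved exactly
        x = np.linalg.solve(A, y + rho * D.T @ (z - u))
        # z-update: shrinkage on the differences
        z = soft_threshold(D @ x + u, lam / rho)
        # dual update: accumulate the constraint residual D x - z
        u = u + D @ x - z
    return x


def objective(x, y, lam):
    """Value of the TV-denoising objective at x."""
    return 0.5 * np.sum((x - y) ** 2) + lam * np.sum(np.abs(np.diff(x)))
```

A fusion model of the kind the abstract outlines would presumably replace the single fidelity term with weighted terms tied to the different bands, but the alternation between a quadratic solve, a shrinkage step, and a dual update would keep the same shape.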


Table 1. Quantitative comparison for the region enclosed by the dotted line in Fig. 2

| $w_1$ | $w_2$ | EN | C | PSNR | SSIM | CC | AG | MI |
|---|---|---|---|---|---|---|---|---|
| 0.5 | 0.1 | 6.891 | 26.469 | 38.267 | 0.265 | 0.436 | 2.972 | 5.784 |
| 0.5 | 0.5 | 6.893 | 26.083 | 38.565 | 0.329 | 0.435 | 2.417 | 5.501 |
| 0.5 | 0.7 | 6.910 | 25.882 | 38.692 | 0.357 | 0.433 | 2.227 | 5.309 |
| 0.7 | 0.5 | 6.767 | 23.654 | 39.580 | 0.319 | 0.439 | 3.281 | 5.185 |
| 0.7 | 0.7 | 6.813 | 24.208 | 39.417 | 0.346 | 0.437 | 3.194 | 5.047 |
| 0.7 | 1.3 | 6.887 | 24.928 | 39.018 | 0.421 | 0.431 | 2.966 | 4.656 |
| 1.3 | 0.7 | 6.320 | 16.222 | 34.579 | 0.289 | 0.434 | 2.622 | 4.445 |
| 1.3 | 1.3 | 6.444 | 17.739 | 36.047 | 0.366 | 0.432 | 2.515 | 4.225 |
| 1.3 | 2 | 6.579 | 19.452 | 37.359 | 0.439 | 0.426 | 2.495 | 3.963 |
| 2 | 1.3 | 6.003 | 11.476 | 29.331 | 0.270 | 0.414 | 2.138 | 3.814 |
| 2 | 2 | 6.128 | 12.831 | 30.582 | 0.336 | 0.415 | 2.096 | 3.648 |
| 2 | 2.5 | 6.217 | 13.862 | 31.478 | 0.380 | 0.413 | 2.095 | 3.532 |
| 2.5 | 2 | 5.895 | 10.012 | 27.508 | 0.272 | 0.399 | 1.944 | 3.453 |
| 2.5 | 2.5 | 5.978 | 10.794 | 28.226 | 0.310 | 0.401 | 1.932 | 3.362 |

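Table 2. Evaluation metrics of each algorithm

| Image | Metric | GTF | DTCWT | NSST_NSCT | ADF | MDDR | Dual Branch | U2Fusion | FusionGAN | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|
| Kaptein_1123 | SD | 37.34 | 39.87 | 35.41 | 38.04 | 50.55 | 24.34 | 41.50 | 27.98 | 45.95 |
| Kaptein_1123 | EN | 6.94 | 6.89 | 6.83 | 6.79 | 7.20 | 6.48 | 7.04 | 6.33 | 7.24 |
| Kaptein_1123 | C | 23.93 | 28.41 | 25.16 | 27.39 | 36.39 | 17.55 | 30.57 | 15.44 | 33.39 |
| Kaptein_1123 | MI | 3.38 | 2.96 | 2.57 | 3.36 | 3.29 | 2.61 | 2.58 | 3.43 | 3.50 |
| Kaptein_1123 | SSIM | 0.45 | 0.43 | 0.44 | 0.48 | 0.53 | 0.21 | 0.37 | 0.20 | 0.57 |
| Kaptein_1123 | SF | 7.08 | 9.55 | 10.30 | 5.91 | 8.42 | 4.79 | 11.56 | 3.94 | 7.64 |
| Movie_01 | SD | 39.01 | 34.80 | 31.53 | 33.79 | 40.70 | 17.11 | 35.43 | 23.50 | 51.44 |
| Movie_01 | EN | 6.77 | 6.49 | 6.39 | 6.35 | 6.51 | 5.98 | 6.94 | 5.95 | 6.84 |
| Movie_01 | C | 31.05 | 23.52 | 20.65 | 22.91 | 26.77 | 12.89 | 23.99 | 13.92 | 35.06 |
| Movie_01 | MI | 5.03 | 3.94 | 3.27 | 4.58 | 3.72 | 2.87 | 2.88 | 3.54 | 4.47 |
| Movie_01 | SSIM | 0.16 | 0.19 | 0.21 | 0.32 | 0.32 | 0.05 | 0.19 | 0.10 | 0.53 |
| Movie_01 | SF | 3.32 | 9.77 | 10.13 | 10.27 | 7.13 | 4.44 | 11.73 | 2.64 | 5.30 |
| soldier_behind_smoke | SD | 42.88 | 27.90 | 24.89 | 26.18 | 30.61 | 35.13 | 35.26 | 24.93 | 45.24 |
| soldier_behind_smoke | EN | 7.26 | 6.74 | 6.61 | 6.62 | 6.92 | 6.97 | 7.14 | 6.35 | 7.35 |
| soldier_behind_smoke | C | 32.82 | 21.48 | 18.09 | 20.82 | 24.36 | 26.46 | 28.03 | 15.37 | 35.74 |
| soldier_behind_smoke | MI | 3.48 | 2.51 | 1.86 | 3.12 | 1.97 | 3.53 | 1.80 | 2.33 | 3.16 |
| soldier_behind_smoke | SSIM | 0.44 | 0.21 | 0.27 | 0.19 | 0.34 | 0.02 | 0.27 | 0.32 | 0.47 |
| soldier_behind_smoke | SF | 8.93 | 11.43 | 12.61 | 9.78 | 10.35 | 8.18 | 13.21 | 5.18 | 8.01 |
| Marne_04 | SD | 53.09 | 26.60 | 24.47 | 25.06 | 31.79 | 26.51 | 49.42 | 25.06 | 53.57 |
| Marne_04 | EN | 7.57 | 6.69 | 6.63 | 6.58 | 6.99 | 6.54 | 7.60 | 6.58 | 7.62 |
| Marne_04 | C | 47.65 | 20.95 | 18.69 | 19.74 | 24.63 | 19.72 | 40.38 | 19.74 | 43.29 |
| Marne_04 | MI | 4.54 | 2.41 | 1.76 | 2.72 | 2.30 | 3.13 | 1.88 | 2.72 | 3.40 |
| Marne_04 | SSIM | 0.21 | 0.26 | 0.26 | 0.20 | 0.36 | 0.29 | 0.22 | 0.20 | 0.54 |
| Marne_04 | SF | 5.73 | 7.66 | 8.34 | 6.17 | 7.14 | 3.38 | 10.77 | 6.17 | 7.46 |
| Marne_06 | SD | 47.39 | 27.90 | 25.76 | 26.78 | 34.65 | 28.29 | 46.15 | 46.67 | 53.53 |
| Marne_06 | EN | 7.30 | 6.77 | 6.70 | 6.70 | 7.05 | 6.67 | 7.48 | 7.33 | 7.59 |
| Marne_06 | C | 35.68 | 22.07 | 20.34 | 21.26 | 28.08 | 21.05 | 36.61 | 35.07 | 42.88 |
| Marne_06 | MI | 4.26 | 2.95 | 2.31 | 3.23 | 2.69 | 2.74 | 2.48 | 3.07 | 3.46 |
| Marne_06 | SSIM | 0.30 | 0.37 | 0.35 | 0.31 | 0.37 | 0.11 | 0.30 | 0.23 | 0.57 |
| Marne_06 | SF | 6.05 | 7.00 | 7.66 | 4.74 | 6.44 | 3.82 | 10.15 | 5.95 | 5.27 |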
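The tables report metrics by abbreviation only. Assuming EN, SD, and SF carry their conventional meanings in the image fusion literature (information entropy, standard deviation, and spatial frequency), a minimal NumPy sketch of how such values are commonly computed for an 8-bit grayscale image follows. The exact definitions and normalizations used in the paper are not given on this page, so the function names and formulas here are illustrative assumptions and may not reproduce the tabulated numbers.

```python
import numpy as np


def entropy(img):
    """Shannon entropy (bits) of the 256-bin gray-level histogram."""
    hist, _ = np.histogram(img, bins=256, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]                        # skip empty bins so log2 is defined
    return float(-np.sum(p * np.log2(p)))


def standard_deviation(img):
    """Standard deviation of pixel intensities, a common contrast proxy."""
    return float(np.std(np.asarray(img, dtype=float)))


def spatial_frequency(img):
    """sqrt(RF^2 + CF^2), with RF and CF the RMS horizontal and vertical
    first differences. Averaging over the difference count is one common
    convention (an assumption here); others divide by the pixel count."""
    img = np.asarray(img, dtype=float)
    rf2 = np.mean((img[:, 1:] - img[:, :-1]) ** 2)   # row frequency squared
    cf2 = np.mean((img[1:, :] - img[:-1, :]) ** 2)   # column frequency squared
    return float(np.sqrt(rf2 + cf2))
```

Under these definitions, larger values indicate a richer gray-level distribution (EN), higher global contrast (SD), and more edge and texture activity (SF).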

